Non-Stop Traveling Technology for Moon Rovers

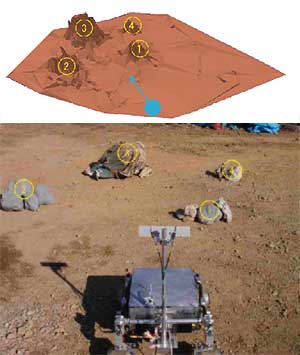

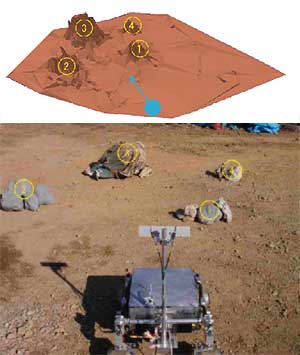

The fourth model of Micro5, "TOURER", under test with non-stop running algorithms - TOURER (Testbed for Unknown/Unstructured Terrain Roving and Engineering Research) was designed and built to test high-level navigation technologies for faster travel. TOURER is equipped with a stereo vision system called RSV (Real-time Self-localizing Vision), inertial sensors, odometers, and other sensors. It is driven by four networked processors with two vision DSPs.

In an upcoming Japanese moon mission, robotic surface vehicles called "moon rovers" are expected to explore the lunar surface widely, sampling and examining rocks within a very short mission term. The rovers have to travel at least 1 km within 10 Earth days, because the mission term on the surface is limited to the lunar daytime. This means the rovers have to move 5 to 10 times faster than JPL/NASA's Mars Exploration Rovers (MER) did. The key issue is "NON-STOP" movement. We have approached this problem by combining autonomy and human intervention with a new communication strategy between the robot on the moon and the human operator on Earth. The approach has been examined in experiments with a testbed rover, the Micro5 TOURER, shown in the picture. You can see a demonstration video by clicking the picture.
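As a rough check on the travel requirement above, the average speed implied by 1 km in 10 Earth days can be worked out directly. The sketch below is illustrative only: the function name is hypothetical, and the result is an average over the whole period, so the actual driving speed would have to be higher whenever the rover stops.

```python
def required_average_speed(distance_m: float, earth_days: float) -> float:
    """Average speed in m/s needed to cover distance_m within earth_days."""
    seconds = earth_days * 24 * 3600  # one Earth day = 86,400 s
    return distance_m / seconds


speed = required_average_speed(1000.0, 10.0)
print(f"{speed * 1000:.2f} mm/s average, {speed * 3600:.2f} m per hour")
```

This works out to roughly 1.16 mm/s, or about 4.17 m per hour, averaged over all ten days. Any time spent stopped has to be made up by faster driving, which is one way to see why non-stop movement matters.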


Yoji KURODA, Masahide KAWANISHI, and Mitsuaki MATSUKUMA, "Tele-Operating System for Continuous Operation of Lunar Rover", Proc. of 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003), CD-ROM, 2003.

Y.KURODA, M.KAWANISHI, M.MATSUKUMA, Y.KUNII, T.KUBOTA, "Tele-Driving System for Nonstop Traveling of Lunar Rover and Its Field Experiment", Proc. of the 7th International Symposium on Artificial Intelligence, Robotics and Automation in Space (i-SAIRAS'03), CD-ROM, 2003.

SELENE-B Pamphlet (provided by JAXA, low resolution 350KB)

SELENE-B Pamphlet (provided by JAXA, high resolution 13MB)

Vision-based Localization & Mapping

Knowing where it is now - this is one of the essential problems for autonomous robotic vehicles that explore unknown terrain. The RSV (Real-time Self-localizing Vision) system can find and track more than sixteen features at the same time, and calculates its position with an error of no more than two percent of the distance traveled. RSV can also be used for indoor or urban applications, since it can remove the influence of moving objects. The upper image is a measured and reconstructed geometrical map.

For a robot to find its own position is an essential problem, but it is not easy to solve. GPS does not always work well where the sky is not widely open, e.g., in woods, on mountainsides, near or inside buildings, or in a crowd of people. On the other hand, dead reckoning using odometry in natural terrain accumulates very large integration errors. To solve this problem, we have developed the "RSV: Real-time Self-localizing Vision" system. RSV finds its position relative to where it started without any prior information (maps), and it automatically uses many feature points on the ground to calculate its position accurately. RSV is also applicable in a crowd of people, since it has a function to remove the influence of moving objects.
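The way dead-reckoning errors build up can be illustrated with a small sketch. It is not the RSV method and not a model of any particular rover: it assumes a rover that actually drives straight, while its position estimate integrates a heading with a small constant bias per step (the bias value and step length are hypothetical). The point is only that the error, as a fraction of distance traveled, keeps growing.

```python
import math


def dead_reckon(steps: int, step_len: float, heading_bias_rad: float):
    """Integrate odometry for a rover that truly drives straight along +x.

    heading_bias_rad is a hypothetical, uncorrected heading error added at
    every step (for example from wheel slip or gyro drift).
    """
    x = y = 0.0
    heading = 0.0
    for _ in range(steps):
        heading += heading_bias_rad  # the bias is integrated, never corrected
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
    return x, y


def error_fraction(steps: int, step_len: float, heading_bias_rad: float) -> float:
    """Position error of the estimate divided by the true distance traveled."""
    x, y = dead_reckon(steps, step_len, heading_bias_rad)
    true_distance = steps * step_len
    return math.hypot(x - true_distance, y) / true_distance


for n in (100, 1000):
    print(n * 0.1, "m traveled, relative error:", error_fraction(n, 0.1, 0.001))
```

With these assumed numbers the relative error is larger after 100 m than after 10 m, which is the behavior that a bounded relative error, such as the two-percent figure quoted for RSV, is meant to avoid.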

RSV can not only find its position but also build a geometrical map simultaneously. The map can be extended by mosaicking the maps taken at each location.
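One common way to mosaic local maps is to transform each local map into a shared global frame using the pose at which it was taken. The sketch below shows that idea in 2D under simplifying assumptions: poses are `(x, y, theta)` tuples, maps are lists of points, and all names are hypothetical. It is not taken from the RSV implementation, which builds geometrical maps from stereo vision.

```python
import math


def to_global(points_local, pose):
    """Rotate and translate local (x, y) points into the global frame.

    pose is (x0, y0, theta): where the local map was taken and the heading
    at that moment, in radians.
    """
    x0, y0, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x0 + c * px - s * py, y0 + s * px + c * py) for px, py in points_local]


def mosaic(local_maps):
    """Merge (pose, points) pairs into a single list of global points."""
    merged = []
    for pose, points in local_maps:
        merged.extend(to_global(points, pose))
    return merged


global_map = mosaic([
    ((0.0, 0.0, 0.0), [(1.0, 0.0), (2.0, 0.5)]),
    ((1.0, 2.0, math.pi / 2), [(1.0, 0.0)]),
])
print(global_map)
```

The quality of such a mosaic depends directly on the accuracy of the poses, which is why localization and mapping are handled together.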

*patent pending

Accurate Localization on the Moon

Y.KURODA, T.KUROSAWA, A.TSUCHIYA, T.KUBOTA, "Accurate Localization in Combination with Planet Observation and Dead Reckoning for Lunar Rover", Proc. of 2004 IEEE International Conference on Robotics and Automation (ICRA 2004), CD-ROM, 2004.

Y.KURODA, T.KUROSAWA, A.TSUCHIYA, S.SHIMODA, T.KUBOTA, "Position Estimation Scheme for Lunar Rover Based on Integration of the Sun and the Earth Observation and Dead Reckoning", Proc. of the 7th International Symposium on Artificial Intelligence, Robotics and Automation in Space (i-SAIRAS'03), CD-ROM, 2003.

Mobility System for Planetary Rovers

The Micro5 series - these rovers are equipped with the PEGASUS five-wheel-drive suspension system. The first model, shown in the picture above, is made of composite materials and has a total mass of only 5 kg (excluding science sensors). It can be driven by electrical power generated by its onboard solar cells. It can climb a step 15 cm high and a slope of 40 degrees. In the picture, the right body is tilted almost 50 degrees.

We have developed and proposed a suspension system called "PEGASUS: Pentad Grade Assist Suspension" for the upcoming Japanese moon rover mission. PEGASUS offers mobility performance as good as that of a rocker-bogie suspension, with a very simple mechanism called the "Only-One-Joint" architecture. It is able to climb step-like terrain regardless of its wheel diameter, with low energy consumption.

The Micro5 series, testbeds and prototypes of micro planetary rovers, has been developed with PEGASUS as its mobility system. Several versions of Micro5 are being tested on a variety of terrain types. You can see a result of the research in a video.

Y.Kuroda, T.Teshima, Y.Sato, T.Kubota, "Mobility Performance Evaluation of Planetary Rover with Similarity Model Experiment", Proc. of IEEE International Conference on Robotics and Automation (ICRA'04), CD-ROM, 2004.

Y.KURODA, Y.KUNII, T.KUBOTA, "Proposition of Microrover System for Lunar Exploration", Journal of Robotics and Mechatronics, Vol.12, No.2, pp.91-95, 2000.

Y.Kuroda, Koji Kondo, Kazuaki Nakamura, Yashuharu Kunii, and Takashi Kubota, "Low Power Mobility System for Micro Planetary Rover Micro5", Proc. of the 5th International Symposium on Artificial Intelligence, Robotics and Automation in Space (i-SAIRAS'99), pp.77-82, 1999.

Micro5 on ISAS/JAXA's web page

Synthetic World: an Incubator for Intelligent Robots


The Synthetic World - a technique for making robots intelligent by controlling the complexity of the world that the robots sense. The synthetic world is a kind of hardware-in-the-loop test.

An autonomous underwater vehicle swimming in a synthetic world - The vehicle senses her world with her sensors, i.e., multichannel ultrasonic range finders (sonars), flowmeters, inertial sensors, and a compass. No vision sensor was used in this experiment. With some of the sonar data swapped for virtually created data, the vehicle swam while avoiding collisions with "virtual obstacles." The arrows in the picture visualize the sonar sound beams: arrows pointing at the wall are real measurements, and those pointing at obstacles are virtually created. Note that this picture was produced by post-processing.

"Robots are not things to create

but ones to bear and train."

The synthetic world is designed under this policy. It is created by combining the real world and a virtual one. Robots (and living things, too!) perceive their surroundings only through their sensory information. So, if we create appropriate sensory information artificially for them, they will perceive it as the world they are in. When we create all of the sensory information provided to the robot, it is what is called "simulation." Simulation is good for the very beginning of development because it is easy to handle, but making robots sophisticated requires more realistic information than simulation can provide. On the other hand, the real world may be too complex (in the natural field) or too simple (in labs, test fields, or test tanks). The synthetic world approach is meant to solve these problems. Most of the sensory information should be real (dynamics measured via inertial sensors, range data from lasers or sonars, images from vision sensors, etc.), and some additional information, which is usually key to improving the robots' intelligence, should be added to make the world appropriate for training the robots.
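The idea of mixing real and virtual sensory information can be sketched for a multichannel sonar. This is a minimal illustration under stated assumptions, not the MVS implementation: ranges are in meters, one entry per sonar channel, and a virtual obstacle replaces the real reading only when it would be nearer than the real echo. The function name and the `None` convention for "no virtual obstacle in this beam" are hypothetical.

```python
def synthesize_ranges(real_ranges, virtual_ranges):
    """Blend real sonar ranges with ranges to virtual obstacles, per channel.

    real_ranges: measured distances, one per sonar channel.
    virtual_ranges: distance to a virtual obstacle in the same beam, or None
    if no virtual obstacle lies in that beam.
    """
    blended = []
    for real, virtual in zip(real_ranges, virtual_ranges):
        if virtual is not None and virtual < real:
            blended.append(virtual)  # the virtual obstacle hides the real wall
        else:
            blended.append(real)  # keep the real measurement
    return blended


real = [5.0, 4.0, 6.0]       # e.g. echoes from the tank wall
virtual = [None, 2.5, 7.0]   # a virtual obstacle crosses beams 2 and 3
print(synthesize_ranges(real, virtual))
```

The vehicle's controller receives the blended list as if it were ordinary sonar data, so all other sensing and the vehicle dynamics remain real while only the obstacle layout is controlled.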

The concept has been realized as software called "MVS (Multi-Vehicle Simulator)", which was part of my Ph.D. thesis at the Institute of Industrial Science, the University of Tokyo. MVS is a real-time, multi-platform software system with a network-distributed architecture. You can see some related works here.

Y.Kuroda, K.Aramaki, T.Ura, "AUV Test using Real/Virtual Synthetic World", Proc. of IEEE Conf. on Autonomous Underwater Vehicle Technology '96 (AUV96), pp.365-370, 1996.

Y.KURODA, K.ARAMAKI, T. URA, H. YAMATO, T.SUGANO: "Underwater Environment Simulator for Underwater Robots Using Network-Distributed Processing" (ネットワーク分散処理による海中ロボット用海中環境シミュレータ), Journal of the Society of Naval Architects of Japan, Vol.178, (1995.12), pp.667-674, in Japanese.

Y. KURODA, T. URA: "Behavior Decision Mechanism Supporting Multiple Underwater Robots" (複数海中ロボットに対応する行動決定機構), Journal of the Society of Naval Architects of Japan, Vol.177, (1995.5), pp.447-455, in Japanese.

Y.Kuroda, T.Ura, "Vehicle Control Architecture for Operating Multiple Vehicles", Proc. of IEEE Symp. on Autonomous Underwater Vehicle Technology '94, (1994.7), pp.323-329.

Solar-Powered Autonomous Vessels

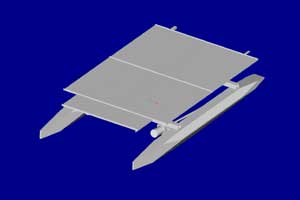

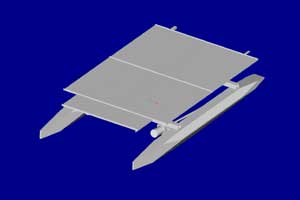

The fourth model of the TRIUMPH series - TRIUMPH-4 is an ASURV testbed for research on autonomy on the water surface. It is four meters long and two meters wide, with a displacement of 150 kg. The solar cells deliver up to 800 watts of electricity. It can cruise at up to 4 knots.

Small autonomous surface vehicles (ASURVs) are potentially great tools for investigating the constituents of water at the surface of an ocean or lake. Predicting outbreaks of red tide is usually difficult, even when remote sensing from satellites can be used, and deploying many buoys in advance is not a good approach. Many small ASURVs cruising in formation could find symptoms of outbreaks earlier and selectively survey part of the ocean in more detail.

The research includes the following topics:

- Navigation & Control

- Vision-based collision avoidance

- Tele-operation & monitoring

- Strategy for activity vs. energy consumption

- Formation cruising & Swarm Intelligence

- Optimal system design

- Education for Undergrads

M.KUMAGAI, T.URA, Y.KURODA, R.WALKER: "A new autonomous underwater vehicle designed for lake environment monitoring", Advanced Robotics, Vol.6, No. 1, pp.17-26, 2002.4.

Yoji KURODA, Masakazu KOMATU, Mitio KUMAGAI, and Tamaki URA, "Design and Development of Autonomous Solar-Powered Surface Vehicle for Lake Environmental Survey", Journal of Japan Society for Design Engineering, Vol. 36, No.7, pp.317-322, 2001, in Japanese.

Tamaki URA, Yoji KURODA, Michio KUMAGAI: "Monitoring of Harmful Plankton Using an Autonomous Robot System" (自律型ロボットシステムを用いた有害プランクトンの監視), Proc. of the 12th Public Symposium of 関西水圏環境研究機構, "The Increase of Harmful and Toxic Plankton Becoming Apparent on the Earth" (地球上で顕在化する有害有毒プランクトンの増加), pp.92-98, 1999.7, in Japanese.

Most of the research shown above has been done at the

Autonomous Mobile Systems Laboratory, Meiji University