Long-term behavior of tokens in a case of two tokens. Without LayerNorm (left), both tokens collapse to the same point; whereas with LayerNorm (right) such a collapse would not necessarily happen. First token converges either to (0,1) or (0,-1). Assuming convergence to (0,1) for the first token, the second token converges to B, if it is initially located within the red segment.

Long-term behavior of tokens in a case of two tokens. Without LayerNorm (left), both tokens collapse to the same point; whereas with LayerNorm (right) such a collapse would not necessarily happen. First token converges either to (0,1) or (0,-1). Assuming convergence to (0,1) for the first token, the second token converges to B, if it is initially located within the red segment.

On the Role of Attention Masks and LayerNorm in Self-Attention Dynamics

The celebrated attention mechanism has proven highly effective in the architecture of transformers, which serve as the key building block of modern foundation models including large language models. Recent studies have shown that pure self-attention suffers from an increasing degree of rank collapse as depth increases, limiting model expressivity and further utilization of model depth. The existing literature on rank collapse, however, has mostly overlooked other critical components in transformers that may alleviate the rank collapse issue. In a recent work, we provide a general analysis of rank collapse under self-attention, taking into account the effects of attention masks and layer normalization (LayerNorm). The main questions we address are as follows:

We view self-attention as a discrete-time dynamical system (closely resembling the architecture used in practice) and answer these questions through a rigorous analysis, making novel use of a graph-theoretic approach and incorporating advanced results on infinite products of inhomogeneous non-negative matrices. In summary, we make the following contributions:

The celebrated attention mechanism has proven highly effective in the architecture of transformers, which serve as the key building block of modern foundation models including large language models. Recent studies have shown that pure self-attention suffers from an increasing degree of rank collapse as depth increases, limiting model expressivity and further utilization of model depth. The existing literature on rank collapse, however, has mostly overlooked other critical components in transformers that may alleviate the rank collapse issue. In a recent work, we provide a general analysis of rank collapse under self-attention, taking into account the effects of attention masks and layer normalization (LayerNorm). The main questions we address are as follows:

- Can attention masks alleviate rank collapse of tokens under self-attention? If so, what type of attention mask would be the most effective?

- Can LayerNorm alleviate rank collapse of tokens under self-attention? If so, what long-term behavior of tokens would LayerNorm lead to?

We view self-attention as a discrete-time dynamical system (closely resembling the architecture used in practice) and answer these questions through a rigorous analysis, making novel use of a graph-theoretic approach and incorporating advanced results on infinite products of inhomogeneous non-negative matrices. In summary, we make the following contributions:

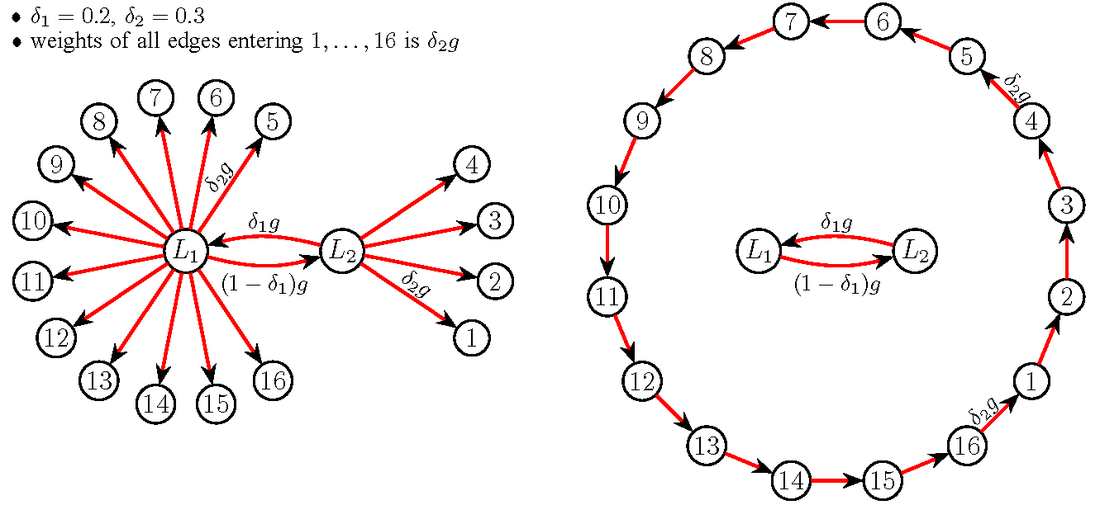

- We establish that with pure self-attention, the exponential convergence of tokens to a common representation holds for a broad class of attention masks, accounting for the causal mask and a wide class of sparse attention patterns such as the sliding window. The key property that leads to the exponential rank collapse is a token that serves as a common “context” for all the other tokens in the sequence to directly or indirectly attend to. Our results also show that local attention can slow down the rate of rank collapse, suggesting its potential advantage over full bidirectional attention from an expressivity perspective at finite depth.

- We show that with LayerNorm, when the value matrices are orthogonal, the exponential convergence of tokens to a common point on the unit sphere holds for a broad class of attention masks. Nonetheless, by constructing nontrivial counterexamples, we prove that the self-attention dynamics with LayerNorm can simultaneously have a rich set of equilibria of any rank ranging from one to full. Moreover, we rigorously establish that self-attention with LayerNorm, together with proper choice of value matrices, can provably prevent collapsing to a rank one subspace for a generic class of input token sequences.

- On the role of attention masks and LayerNorm in self-attention dynamics, with X. Wu, Y. Wang, S. Jegelka, and A. Jadbabaie, Advances in Neural Information Processing Systems (NeurIPS), 2024, under review.

Learning from Sparse Belief Samples

A key behavioral assumption in many approaches to non-Bayesian social learning is that agents are capable of repeatedly communicating their full belief distributions with their peers. Decision makers, however, in large populations are likely not to satisfy such a cognitive demand, given the limited/costly communication and information processing resources. Motivated by such limitations, we pose the question as to whether almost sure learning is achievable if agents are only allowed to communicate samples from their beliefs.

We provide a definite positive answer to this question by proposing a belief update mechanism that can achieve learning with probability one in a setting where agents only communicate samples from their beliefs, assuming a strongly connected network and a “collective distinguishability” assumption, which are both required for learning even in full-belief-sharing settings. In our proposed belief update mechanism, each agent’s belief is a normalized weighted geometric interpolation between a fully Bayesian private belief — aggregating information from the private source — and an ensemble of empirical distributions of the samples shared by her neighbors over time. By carefully constructing asymptotic almost-sure lower/upper bounds on the frequency of shared samples matching the true state/or not, we rigorously prove the convergence of all the beliefs to the true state, with probability one.

A key behavioral assumption in many approaches to non-Bayesian social learning is that agents are capable of repeatedly communicating their full belief distributions with their peers. Decision makers, however, in large populations are likely not to satisfy such a cognitive demand, given the limited/costly communication and information processing resources. Motivated by such limitations, we pose the question as to whether almost sure learning is achievable if agents are only allowed to communicate samples from their beliefs.

We provide a definite positive answer to this question by proposing a belief update mechanism that can achieve learning with probability one in a setting where agents only communicate samples from their beliefs, assuming a strongly connected network and a “collective distinguishability” assumption, which are both required for learning even in full-belief-sharing settings. In our proposed belief update mechanism, each agent’s belief is a normalized weighted geometric interpolation between a fully Bayesian private belief — aggregating information from the private source — and an ensemble of empirical distributions of the samples shared by her neighbors over time. By carefully constructing asymptotic almost-sure lower/upper bounds on the frequency of shared samples matching the true state/or not, we rigorously prove the convergence of all the beliefs to the true state, with probability one.

- Belief samples are all you need for social learning, with M. JafariNodeh and A. Jadbabaie, 63rd IEEE Conference on Decision and Control, under review.

- Social learning with sparse belief samples, with R. Salhab and A. Jadbabaie, in Proceedings of the 59th IEEE Conference on Decision and Control, 2020.

Opinion Dynamics Under Social Pressure

This project studies opinion dynamics under social pressure, where agents on a network declare opinions to each other while maintaining their hidden true beliefs; although agents adjust their declared opinions to align with their neighbors' past opinions, they retain a bias towards their true beliefs. To study this problem, we use a model based on the interacting Pólya urn model developed in a previous work by Jadbabaie et al. In this model, each agent has a bias parameter that governs the influence of their true belief on their declared opinions. Additionally, a stochastic element is introduced, with agents' declared opinions being random and sampled from a probability distribution based on their true beliefs, their neighbors' past declared opinions, and their bias parameter.

We prove that the probability distributions governing the agents' declared opinions converge almost surely and do not fluctuate indefinitely over time, using Lyapunov theory and stochastic approximation techniques. Furthermore, we identify the necessary and sufficient conditions for achieving consensus among the agents, leading them to asymptotically declare the same opinion with probability 1. We find that consensus depends on the Jacobian of the agents' parameter updates at the boundary equilibrium points. Notably, due to social pressure, consensus can sometimes be achieved even when some agents in the network hold conflicting true opinions. Our analysis determines precisely when this phenomenon occurs.

This aspect of the model, where agents converge in their declared opinions despite divergent true beliefs, raises the question of how to disentangle the effects of social pressure to estimate inherent beliefs from declared opinions. We use results on large-deviation behavior of martingales to show that, provided the history of all agents' declarations is available to the estimator, the maximum likelihood estimator will converge to the true beliefs and bias parameters of the agents almost surely (even under consensus conditions).

This project studies opinion dynamics under social pressure, where agents on a network declare opinions to each other while maintaining their hidden true beliefs; although agents adjust their declared opinions to align with their neighbors' past opinions, they retain a bias towards their true beliefs. To study this problem, we use a model based on the interacting Pólya urn model developed in a previous work by Jadbabaie et al. In this model, each agent has a bias parameter that governs the influence of their true belief on their declared opinions. Additionally, a stochastic element is introduced, with agents' declared opinions being random and sampled from a probability distribution based on their true beliefs, their neighbors' past declared opinions, and their bias parameter.

We prove that the probability distributions governing the agents' declared opinions converge almost surely and do not fluctuate indefinitely over time, using Lyapunov theory and stochastic approximation techniques. Furthermore, we identify the necessary and sufficient conditions for achieving consensus among the agents, leading them to asymptotically declare the same opinion with probability 1. We find that consensus depends on the Jacobian of the agents' parameter updates at the boundary equilibrium points. Notably, due to social pressure, consensus can sometimes be achieved even when some agents in the network hold conflicting true opinions. Our analysis determines precisely when this phenomenon occurs.

This aspect of the model, where agents converge in their declared opinions despite divergent true beliefs, raises the question of how to disentangle the effects of social pressure to estimate inherent beliefs from declared opinions. We use results on large-deviation behavior of martingales to show that, provided the history of all agents' declarations is available to the estimator, the maximum likelihood estimator will converge to the true beliefs and bias parameters of the agents almost surely (even under consensus conditions).

- Estimating true beliefs from declared opinions, with J. Tang, A. Adler, and A. Jadbabaie, IEEE Transactions on Automatic Control, conditionally accepted.

- Stochastic opinion dynamics under social pressure in arbitrary networks, with J. Tang, A. Adler, and A. Jadbabaie, IEEE Transactions on Automatic Control, under review.

Demystifying Oversmoothing in Attention-Based Graph Neural Networks

Oversmoothing in Graph Neural Networks (GNNs) refers to the phenomenon where increasing network depth leads to homogeneous node representations. While previous work has established that Graph Convolutional Networks (GCNs) exponentially lose expressive power, it remains controversial whether the graph attention mechanism can mitigate oversmoothing.

In a recent work, we provide a definitive answer to this question through a rigorous mathematical analysis, by viewing attention-based GNNs as nonlinear time-varying dynamical systems and incorporating tools and techniques from the theory of products of inhomogeneous matrices and the joint spectral radius. We establish that, contrary to popular belief, the graph attention mechanism cannot prevent oversmoothing and loses expressive power exponentially. The proposed framework extends the existing results on oversmoothing for symmetric GCNs to a significantly broader class of GNN models, including random walk GCNs and Graph Attention Networks (GATs). In particular, our analysis accounts for asymmetric, state-dependent and time-varying aggregation operators and a wide range of common nonlinear activation functions, such as ReLU, LeakyReLU, GELU and SiLU.

Oversmoothing in Graph Neural Networks (GNNs) refers to the phenomenon where increasing network depth leads to homogeneous node representations. While previous work has established that Graph Convolutional Networks (GCNs) exponentially lose expressive power, it remains controversial whether the graph attention mechanism can mitigate oversmoothing.

In a recent work, we provide a definitive answer to this question through a rigorous mathematical analysis, by viewing attention-based GNNs as nonlinear time-varying dynamical systems and incorporating tools and techniques from the theory of products of inhomogeneous matrices and the joint spectral radius. We establish that, contrary to popular belief, the graph attention mechanism cannot prevent oversmoothing and loses expressive power exponentially. The proposed framework extends the existing results on oversmoothing for symmetric GCNs to a significantly broader class of GNN models, including random walk GCNs and Graph Attention Networks (GATs). In particular, our analysis accounts for asymmetric, state-dependent and time-varying aggregation operators and a wide range of common nonlinear activation functions, such as ReLU, LeakyReLU, GELU and SiLU.

- Demystifying oversmoothing in attention-based graph neural networks, with X. Wu, Z. Wu, and A. Jadbabaie, in Advances in Neural Information Processing Systems (NeurIPS), 2023 (spotlight paper).

Subscription Networks, Verification, and Media Bias

Most individuals acquire the latest news through reports by various news media. Although there is a wealth of different news media, consumers can only focus on few of them, owing to limited time, attention, or cognitive capacity. Different segments of the society may consume vastly different news from different media sources, often without a set of commonly accepted facts or truths. News media also have their own ideological biases and are motivated to influence the public opinion. For example, they can choose to promote news that can persuade their subscribers towards their own political agenda or conceal the unfavorable information that otherwise moves their readers’ beliefs away. News publishers also play the vital role of fact-checking the information before any news release, based on their journalists’ expertise and sources. This fact checking has become increasingly important, due to the prevalence of misinformation in modern digital media platforms.

Motivated by these observations, we study how individuals choose among biased information sources with persuasion motives when the news may or may not be informative. We develop a game-theoretic model with anonymous subscribers with diverse ideological perspectives and two news media with opposing ideological perspectives. The news media are motivated to influence the public opinion through news verification and selective disclosure. In our model, each individual subscribes to a news media, and then each news media chooses whether to verify the informativeness of a private signal and whether to disclose it to its subscribers. In equilibrium, moderate subscribers subscribe to the news media with the opposing view, exhibiting anti-homophily. In contrast, extremist subscribers subscribe to the intermediary with aligned view, exhibiting homophily.

Most individuals acquire the latest news through reports by various news media. Although there is a wealth of different news media, consumers can only focus on few of them, owing to limited time, attention, or cognitive capacity. Different segments of the society may consume vastly different news from different media sources, often without a set of commonly accepted facts or truths. News media also have their own ideological biases and are motivated to influence the public opinion. For example, they can choose to promote news that can persuade their subscribers towards their own political agenda or conceal the unfavorable information that otherwise moves their readers’ beliefs away. News publishers also play the vital role of fact-checking the information before any news release, based on their journalists’ expertise and sources. This fact checking has become increasingly important, due to the prevalence of misinformation in modern digital media platforms.

Motivated by these observations, we study how individuals choose among biased information sources with persuasion motives when the news may or may not be informative. We develop a game-theoretic model with anonymous subscribers with diverse ideological perspectives and two news media with opposing ideological perspectives. The news media are motivated to influence the public opinion through news verification and selective disclosure. In our model, each individual subscribes to a news media, and then each news media chooses whether to verify the informativeness of a private signal and whether to disclose it to its subscribers. In equilibrium, moderate subscribers subscribe to the news media with the opposing view, exhibiting anti-homophily. In contrast, extremist subscribers subscribe to the intermediary with aligned view, exhibiting homophily.

- Subscription networks, verification, and media bias, with C. Hsu, M. Yildiz, and A. Jadbabaie, Review of Economic Studies, under review.

Exploiting Strategic Uncertainty for Incentive Design in Crowd-Based Markets

Strategic interactions and uncertainties are at the heart of many crowd-based platforms. Uncertainties can be at systemic level (e.g., strength of a threat/idea in a cyber-security/crowdfunding setting, average willingness-to-pay for a product, or the average reserved price of drivers in a ride-sharing platform) or can be idiosyncratic, explaining the variations at the microscopic level (e.g., differences in people's perception of threats/ideas, or heterogeneity in tastes of consumers for a product or in reserved prices of drivers in a ride-sharing platform). Such uncertainties along with the interdependencies in utilities lead to strategic uncertainty; that is, uncertainty concerning the actions and beliefs (and beliefs about the beliefs, and so on) of others faced by individual decision makers. As we show in a recent work, market maker/platform can exploit this strategic uncertainty to design profitable incentive programs by conditioning payoffs on some aggregate statistics (e.g., sales volume, mass of active drivers, average investment in security, etc.).

Strategic interactions and uncertainties are at the heart of many crowd-based platforms. Uncertainties can be at systemic level (e.g., strength of a threat/idea in a cyber-security/crowdfunding setting, average willingness-to-pay for a product, or the average reserved price of drivers in a ride-sharing platform) or can be idiosyncratic, explaining the variations at the microscopic level (e.g., differences in people's perception of threats/ideas, or heterogeneity in tastes of consumers for a product or in reserved prices of drivers in a ride-sharing platform). Such uncertainties along with the interdependencies in utilities lead to strategic uncertainty; that is, uncertainty concerning the actions and beliefs (and beliefs about the beliefs, and so on) of others faced by individual decision makers. As we show in a recent work, market maker/platform can exploit this strategic uncertainty to design profitable incentive programs by conditioning payoffs on some aggregate statistics (e.g., sales volume, mass of active drivers, average investment in security, etc.).

As an illustrative application of this idea, we introduce what we call sales-based rebate mechanisms as an effective tool to implement price discrimination across a population of buyers with correlated heterogeneous valuations on indivisible goods and services. In order to implement such sales-based rebate mechanisms, the seller charges each buyer a fixed price at the time of purchase contingent on a rebate that is a function of the ex post sales volume to be realized at the end of the sales period. The seller declares both a price and a menu of rebates as a function of sales. We show that, when there is a common component of uncertainty in consumers’ valuations (to which we refer as the quality of the product), such rebates enable a seller to effectively induce different expected net prices at different valuations. Importantly, this effective price discrimination over valuations is achieved keeping both the base price and the rebate uniform across all buyers. This uniformity of price and rebate across buyers is a key advantage of our proposed rebate mechanism, thereby providing a new mechanism for price discrimination in crowd-based markets. We use tools and techniques from game theory and variational optimization to provide insight into the economics of such mechanisms. In particular, we identify two mechanisms that are monotone functions of the sales volume that are easy to implement in practice and perform well when compared with the much more complex optimal mechanism. We provide a rigorous analysis of the optimal mechanism and discuss practical limitations in implementing the globally optimal design, further demonstrating the efficacy of our proposed monotone mechanisms.

Another potential application for this idea is promotion design in ride-sharing platforms, where uncertainty in the availability of drivers can harm the profit of the platform as it may result in too many or too few providers available for service. Platform can use promotions, such as guaranteed hourly wages promotion by Uber, as a means to influence the decision making of drivers and to cope with the uncertainty in the pool of active drivers.

Another potential application for this idea is promotion design in ride-sharing platforms, where uncertainty in the availability of drivers can harm the profit of the platform as it may result in too many or too few providers available for service. Platform can use promotions, such as guaranteed hourly wages promotion by Uber, as a means to influence the decision making of drivers and to cope with the uncertainty in the pool of active drivers.

- Sales-Based rebate design, with A. Jadbabaie, Management Science, vol. 69, pp. 5983–6000, 2023.

- A game theoretic approach to promotion design in two-sided platforms, with A. Jadbabaie, in Proceedings of the 55th Annual Allerton Conference on Communication, Control, and Computing, 2017 [slides].

A Theory of (Mis-)Information Spread on Social Networks

“A lie can travel halfway around the world while the truth is still putting on its shoes.”

There is a large body of evidence suggesting that false news and misinformation are more likely to circulate in social networks, spreading faster, deeper, and more widely than accurate information. Recent empirical studies suggest the novelty in the false news as a potential cause for the wider spread of misinformation. As part of a recent research initiative, we aim to complement such empirical findings by providing a theory that rationalizes the decision of individuals in the spread of news and how this could potentially trigger a wider spread of false or inaccurate information.

One potential rationale for our tendency to share novel news lies in our desire to steer others and have them conform to our opinions. If we find a news quite novel and surprising that significantly affects our opinion, the desire to have others think similarly incentivizes us to influence their opinions as well by sharing the news with them. Sharing news can also have affirmative motives: We may have different/opposing opinions from our friends and share news as an affirmation of our point of view, assuming the credibility of the news source as common knowledge.

“A lie can travel halfway around the world while the truth is still putting on its shoes.”

There is a large body of evidence suggesting that false news and misinformation are more likely to circulate in social networks, spreading faster, deeper, and more widely than accurate information. Recent empirical studies suggest the novelty in the false news as a potential cause for the wider spread of misinformation. As part of a recent research initiative, we aim to complement such empirical findings by providing a theory that rationalizes the decision of individuals in the spread of news and how this could potentially trigger a wider spread of false or inaccurate information.

One potential rationale for our tendency to share novel news lies in our desire to steer others and have them conform to our opinions. If we find a news quite novel and surprising that significantly affects our opinion, the desire to have others think similarly incentivizes us to influence their opinions as well by sharing the news with them. Sharing news can also have affirmative motives: We may have different/opposing opinions from our friends and share news as an affirmation of our point of view, assuming the credibility of the news source as common knowledge.

In a recent work on modeling online news dissemination on a Twitter-like social network, we show how novelty and affirmation motives naturally emerge from the utility-maximizing behavior of the agents when persuasion is the main motive for sharing. We further characterize the dynamics of the news spread endogenously generated by micro-level news-sharing decisions and establish necessary and sufficient conditions for emergence of a cascade. This enables us to qualitatively study the relation between the news precision level and the likelihood of a news-sharing cascade. In particular, our model reveals a connection between wisdom of the crowd and cascade likelihood when sharing news is costly; When the society as a whole is biased, i.e., there is a gap between the true state of interest and the aggregation of prior beliefs, the truth almost always triggers a cascade. In contrast, in a wise or unbiased society, cascades are more likely to occur for imprecise news (which are hence more likely to be false).

- A game-theoretic model of misinformation spread on social networks, with C. Hsu and A. Jadbabaie, Games and Economic Behavior, under review.

- Persuasion, news sharing, and cascades on social networks, with C. Hsu and A. Jadbabaie, preprint.

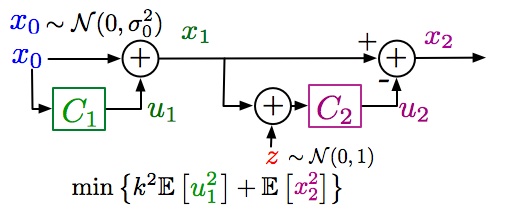

The original Witsenhausen's counterexample

The original Witsenhausen's counterexample

Local Optimality of Almost Piecewise-Linear Quantizers for Witsenhausen's Counterexample

In his seminal work, Witsenhausen (1968) formulated the first counterexample to the misconception that nothing is to be gained by using nonlinear controllers when loss is quadratic and system is linear subject to Gaussian noise. His counterexample is in form of a simple two-stage Linear-Quadratic-Gaussian (LQG) decentralized control system, where he showed that the optimal controller is nonlinear. Finding the optimal solution, however, is still an open problem.

While Witsenhausen's counterexample has been subject of intense research across multiple communities for half a century, a little is known about the topological properties of its optimal solution (e.g., whether it is continuous or not, the number of its fixed points, etc.). One notable result in this line is that the optimal controller is a strictly increasing function with a real analytic left inverse. Consequently, no piecewise-linear strategy can be optimal, though not ruling out the possibility of a discontinuous, and in particular a ``near piecewise-linear'' optimal solution; a conjecture supported by numerous simulations and heuristic approaches.

In a recent work, we formulate Witsenhausen's counterexample in a leader-follower game setup where the follower makes noisy observations from the leader's action and aims to choose her action as close as possible to that of the leader. Leader who moves first and can see the realization of the state, chooses her action to minimize her ex-ante distance from the follower's action as well as the state. We show the existence of nonlinear perfect Bayesian equilibria at which the leader's strategy is a perturbed slopey variation of an optimal MSE quantizer. We then prove that these equilibria are indeed local minima of the original Witsenhausen's setup. Incorporating some relevant results from asymptotic quantization theory, we show that the proposed local minima are near-optimal in that they are at most a constant factor away from the optimal one, in a regime where the ratio to the optimal cost for the optimal linear design tends to infinity. Our work hence provides a supporting theory for the local optimality of near piecewise-linear strategies for Witsenhausen's problem. we further conjecture that the globally optimal solution is indeed among our proposed local minima, which we are trying to prove incorporating a diverse set of ideas from entropy methods in mathematics, optimal transport, and scale-space theory.

In his seminal work, Witsenhausen (1968) formulated the first counterexample to the misconception that nothing is to be gained by using nonlinear controllers when loss is quadratic and system is linear subject to Gaussian noise. His counterexample is in form of a simple two-stage Linear-Quadratic-Gaussian (LQG) decentralized control system, where he showed that the optimal controller is nonlinear. Finding the optimal solution, however, is still an open problem.

While Witsenhausen's counterexample has been subject of intense research across multiple communities for half a century, a little is known about the topological properties of its optimal solution (e.g., whether it is continuous or not, the number of its fixed points, etc.). One notable result in this line is that the optimal controller is a strictly increasing function with a real analytic left inverse. Consequently, no piecewise-linear strategy can be optimal, though not ruling out the possibility of a discontinuous, and in particular a ``near piecewise-linear'' optimal solution; a conjecture supported by numerous simulations and heuristic approaches.

In a recent work, we formulate Witsenhausen's counterexample in a leader-follower game setup where the follower makes noisy observations from the leader's action and aims to choose her action as close as possible to that of the leader. Leader who moves first and can see the realization of the state, chooses her action to minimize her ex-ante distance from the follower's action as well as the state. We show the existence of nonlinear perfect Bayesian equilibria at which the leader's strategy is a perturbed slopey variation of an optimal MSE quantizer. We then prove that these equilibria are indeed local minima of the original Witsenhausen's setup. Incorporating some relevant results from asymptotic quantization theory, we show that the proposed local minima are near-optimal in that they are at most a constant factor away from the optimal one, in a regime where the ratio to the optimal cost for the optimal linear design tends to infinity. Our work hence provides a supporting theory for the local optimality of near piecewise-linear strategies for Witsenhausen's problem. we further conjecture that the globally optimal solution is indeed among our proposed local minima, which we are trying to prove incorporating a diverse set of ideas from entropy methods in mathematics, optimal transport, and scale-space theory.

- Local optimality of almost piecewise-linear quantizers for Witsenhausen's counterexample, with A. Jadbabaie, IEEE Transactions on Automatic Control, vol. 66, pp. 3152-3168, 2020.

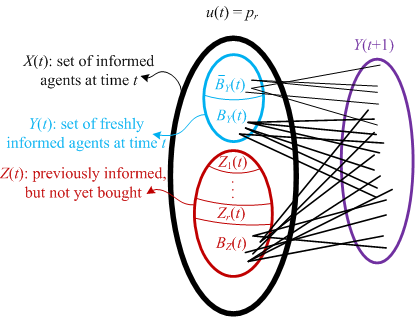

An overall view of the information diffusion via word of mouth.

An overall view of the information diffusion via word of mouth.

Dynamic Pricing in Networks with Price-Driven Information Spreading Processes

The inherent complexity of spread on networks (e.g., spread of information, innovation, diseases and failures) induces a new dimension to dynamic decision making in networks; while choosing a strategy maximizing his utility, a decision maker should also take into account the effect of his strategy on the underlying dynamic spread process. A good example is the advertising strategies of small businesses such as smartphone app developers, where the word of mouth of users is often the only low-budget means of advertisement; the seller should thus use the price both to control the profit and to spread the information about the product in the network. To characterize the optimal dynamic pricing strategy in such settings, we have developed an analytically tractable, yet rich framework capturing the effect of dynamic prices on the extent of information diffusion via word of mouth communication of consumers.

Given the spread versus exploit nature of the problem that the seller faces in each period, it is quite natural to expect fluctuations in the optimal pricing policy. What makes our results novel is the optimality of frequent zero-price sales. More precisely, we show that the optimal dynamic pricing policy for durable products with zero or negligible marginal cost drops the price to zero infinitely often. This finding matches our observation from smartphone app industry, where price histories exhibit frequent zero-price sales. The key novel insight here is that zero-price sales allow the seller to reach potentially profitable but uninformed parts of the network via word of mouth of low-valuation zero-price buyers. By properly timing the drop in prices, the firm can ensure that the marginal gain in future profit by selling the product in these previously-unexplored parts of the network dominates the loss in the immediate profit caused by offering the product for free, making the drop of the price to zero a profitable course of action. The results remain valid in face of forward-looking agents, homophily-based engagement of friends in word of mouth, and network externalities under surprisingly mild assumptions.

The inherent complexity of spread on networks (e.g., spread of information, innovation, diseases and failures) induces a new dimension to dynamic decision making in networks; while choosing a strategy maximizing his utility, a decision maker should also take into account the effect of his strategy on the underlying dynamic spread process. A good example is the advertising strategies of small businesses such as smartphone app developers, where the word of mouth of users is often the only low-budget means of advertisement; the seller should thus use the price both to control the profit and to spread the information about the product in the network. To characterize the optimal dynamic pricing strategy in such settings, we have developed an analytically tractable, yet rich framework capturing the effect of dynamic prices on the extent of information diffusion via word of mouth communication of consumers.

Given the spread versus exploit nature of the problem that the seller faces in each period, it is quite natural to expect fluctuations in the optimal pricing policy. What makes our results novel is the optimality of frequent zero-price sales. More precisely, we show that the optimal dynamic pricing policy for durable products with zero or negligible marginal cost drops the price to zero infinitely often. This finding matches our observation from smartphone app industry, where price histories exhibit frequent zero-price sales. The key novel insight here is that zero-price sales allow the seller to reach potentially profitable but uninformed parts of the network via word of mouth of low-valuation zero-price buyers. By properly timing the drop in prices, the firm can ensure that the marginal gain in future profit by selling the product in these previously-unexplored parts of the network dominates the loss in the immediate profit caused by offering the product for free, making the drop of the price to zero a profitable course of action. The results remain valid in face of forward-looking agents, homophily-based engagement of friends in word of mouth, and network externalities under surprisingly mild assumptions.

- Dynamic pricing in social networks: The word of mouth effect, with A. Jadbabaie and A. Kakhbod, Management Science, vol. 64, pp. 971-979, 2018 [full version].

There is a phase transition in the quality of information aggregation between these two network structures

There is a phase transition in the quality of information aggregation between these two network structures

Dynamic Public Learning in Networks of Strategic Agents

Recent advances in information technology and Internet have provided a wide range of channels to aggregate information, from blogs to wikis to the open source movement to prediction markets. There are abundant sources of information (e.g., news channels, social networks, online forums, surveys, and data bases), with every person having access to a personalized set of the sources. The aggregate (or average) action in a population partially accumulates the information dispersed among them. A fundamental question is how the structure of interactions among individual decision makers affects the quality of the information aggregation. This question is extensively studied in the closely related literature of systemic risk and network resilience. Aggregate learning is a twin of systemic volatility; the less volatile the aggregate action of the whole system, the higher the quality of the learning. Mostly concerned with static settings, these works typically come up with a (sometimes contradicting) ranking of network structures in terms of their performance against uncertainties and disruptive events.

We revisit this problem in the context of learning from noisy observations of the public history in a dynamic setting, where the underlying state of the world affecting the utilities moves on a random walk. Our results reveal that the quality of aggregate learning is affected by different network characteristics which vary with the state dynamics. While the quality of learning for slow walks is mainly determined by the local structure of the graph, for fast dynamics the quality of learning is affected by the distribution of Bonacich centralities. This may result in a phase transition in quality of learning between different networks thus ruling out the possibility of ranking network structures in terms of their quality of information aggregation.

Recent advances in information technology and Internet have provided a wide range of channels to aggregate information, from blogs to wikis to the open source movement to prediction markets. There are abundant sources of information (e.g., news channels, social networks, online forums, surveys, and data bases), with every person having access to a personalized set of the sources. The aggregate (or average) action in a population partially accumulates the information dispersed among them. A fundamental question is how the structure of interactions among individual decision makers affects the quality of the information aggregation. This question is extensively studied in the closely related literature of systemic risk and network resilience. Aggregate learning is a twin of systemic volatility; the less volatile the aggregate action of the whole system, the higher the quality of the learning. Mostly concerned with static settings, these works typically come up with a (sometimes contradicting) ranking of network structures in terms of their performance against uncertainties and disruptive events.

We revisit this problem in the context of learning from noisy observations of the public history in a dynamic setting, where the underlying state of the world affecting the utilities moves on a random walk. Our results reveal that the quality of aggregate learning is affected by different network characteristics which vary with the state dynamics. While the quality of learning for slow walks is mainly determined by the local structure of the graph, for fast dynamics the quality of learning is affected by the distribution of Bonacich centralities. This may result in a phase transition in quality of learning between different networks thus ruling out the possibility of ranking network structures in terms of their quality of information aggregation.

- Dynamic public learning in networks of strategic agents: the role of inter/intra-community ties, with A. Jadbabaie and M. Dahleh, in Proceedings of the 56th IEEE Conference on Decision and Control, 2017 (invited paper).

Budget Allocation in Competitive Diffusion: Seeding vs. Quality

A main feature of product consumption in social networks is what is often called the network effect. For such products, consumption of each agent incentivizes the neighboring agents to consume more as well. There are diverse sets of examples for such products or services. New technologies and innovations, mobile applications, online games, social network platforms and online dating services are among many examples in which consuming from a common product or service is more preferable for people. Firms can exploit the network effect to incentivize a larger consumption of their product by seeding the key individuals in the network.

There is a rich literature on identifying the key player(s) in the network. What we do here is to ask a more fundamental question as how to allocate the budget between seeding agents and improving quality, the other key factor in product consumption. We consider two firms competing in a shared market, each having a limited budget which can be invested on the quality or spent on seeding key individuals in the network. We assume that consumers update their consumption as a myopic linear best response to the quality of the products and consumption of the neighbors. We characterize the unique Nash equilibrium of the game between firms and study the effect of the budgets as well as the network structure on the optimal allocation. In particular, we show that at the equilibrium firms invest more budget on quality when their budgets are close to each other. However, as the gap between budgets widens, competition in qualities becomes less effective and firms spend more of their budget on seeding.

A main feature of product consumption in social networks is what is often called the network effect. For such products, consumption of each agent incentivizes the neighboring agents to consume more as well. There are diverse sets of examples for such products or services. New technologies and innovations, mobile applications, online games, social network platforms and online dating services are among many examples in which consuming from a common product or service is more preferable for people. Firms can exploit the network effect to incentivize a larger consumption of their product by seeding the key individuals in the network.

There is a rich literature on identifying the key player(s) in the network. What we do here is to ask a more fundamental question as how to allocate the budget between seeding agents and improving quality, the other key factor in product consumption. We consider two firms competing in a shared market, each having a limited budget which can be invested on the quality or spent on seeding key individuals in the network. We assume that consumers update their consumption as a myopic linear best response to the quality of the products and consumption of the neighbors. We characterize the unique Nash equilibrium of the game between firms and study the effect of the budgets as well as the network structure on the optimal allocation. In particular, we show that at the equilibrium firms invest more budget on quality when their budgets are close to each other. However, as the gap between budgets widens, competition in qualities becomes less effective and firms spend more of their budget on seeding.

- Competitive diffusion in social networks: Quality or seeding?, with A. Fazeli and A. Jadbabaie, IEEE Transactions on Control of Network Systems, vol. 2, pp. 665- 675, 2017.