I am a Research Scientist at Google DeepMind. I recently graduated from MIT CSAIL, where I was advised by Leslie Kaelbling, Tomás Lozano-Pérez, and Josh Tenenbaum.

Research: I aim to better understand and improve generalization in machine learning. To accomplish this, I leverage techniques from meta-learning, learning to search, and program synthesis, along with insights from mathematics and the physical sciences. I enjoy building collaborations to work across the entire theory-application spectrum.

Twitter / Email / CV / Google Scholar / LinkedIn

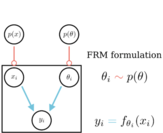

Ferran Alet, Clement Gehring, Tomás Lozano-Pérez, Joshua B. Tenenbaum, Leslie Pack Kaelbling. Under review, 2022. We suggest there is a contradiction in how we model noise in ML and derive a framework for losses in function space.

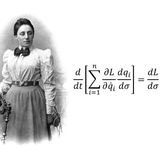

Ferran Alet*, Dylan Doblar*, Allan Zhou, Joshua B. Tenenbaum, Kenji Kawaguchi, Chelsea Finn. NeurIPS 2021. We propose to encode symmetries as conservation tailoring losses and meta-learn them from raw inputs in sequential prediction problems. website / code / interview (10k views)

Ferran Alet, Maria Bauza, Kenji Kawaguchi, Nurullah Giray Kuru, Tomás Lozano-Pérez, Leslie Pack Kaelbling. NeurIPS 2021; the workshop version was a Spotlight at the physical inductive biases workshop. We optimize unsupervised losses for the current input. By optimizing where we act, we bypass generalization gaps and can impose a wide variety of inductive biases. 15-minute talk

Ferran Alet*, Javier Lopez-Contreras*, James Koppel, Maxwell Nye, Armando Solar-Lezama, Tomás Lozano-Pérez, Leslie Pack Kaelbling, Joshua B. Tenenbaum. ICML 2021. We generate a large quantity of diverse real programs by running code instruction-by-instruction and obtain I/O pairs for 200k subprograms. website

Ferran Alet*, Martin Schneider*, Tomás Lozano-Pérez, Leslie Pack Kaelbling. ICLR 2020. By meta-learning programs instead of neural network weights, we can increase meta-learning generalization. We discover new algorithms in simple environments that generalize to complex ones. code / press

Ferran Alet, Erica Weng, Tomás Lozano-Pérez, Leslie Pack Kaelbling. NeurIPS 2019. We frame neural relational inference as a case of modular meta-learning and speed up the original modular meta-learning algorithms by two orders of magnitude, making them practical. code

Maria Bauza, Ferran Alet, Yen-Chen Lin, Tomás Lozano-Pérez, Leslie Pack Kaelbling, Phillip Isola, Alberto Rodriguez. IROS 2019. A diverse dataset of 250 objects, each pushed 250 times, all with RGB-D video. The first probabilistic meta-learning benchmark. project website / code / data / press

Ferran Alet, Adarsh K. Jeewajee, Maria Bauza, Alberto Rodriguez, Tomás Lozano-Pérez, Leslie Pack Kaelbling. ICML 2019 (long talk). We learn to map functions to functions by combining graph networks and attention to build computational meshes, and show that this new framework can solve very diverse problems. talk / code

Ferran Alet, Tomás Lozano-Pérez, Leslie Pack Kaelbling. CoRL 2018. We propose to do meta-learning by training a set of neural networks to be composable, adapting to new tasks by composing modules in novel ways, similar to how we compose known words to express novel ideas. video / code

Ferran Alet, Rohan Chitnis, Tomás Lozano-Pérez, Leslie Pack Kaelbling. IJCAI 2018. People often find entities by clustering; we suggest that, instead, entities can be described as dense regions and propose a very simple algorithm for detecting them, with provable guarantees. video

Andy Zeng et al. ICRA 2018 (Best Systems Paper Award by Amazon Robotics). Description of the system for the Amazon Robotics Challenge 2017 competition, in which we won the stowing task. talk / project website
