KeyForge & TimeForge: Fixing Email Deniability

I’m happy to say that our paper, KeyForge: Mitigating Email Breaches with Forward-Forgeable Signatures, was accepted to USENIX Security 2021!

Take a quick moment to think about every email you’ve ever sent. Perhaps there are a few that, in hindsight, are regrettable: ideas that didn’t pan out, hurtful words, or embarrassing thoughts that you’d prefer remain confidential.

Now, what if those messages were leaked? Perhaps, as an act of revenge, someone with access to your account took a few choice messages and shared them.

An email thief would be inherently untrustworthy, so the receiver could think that these messages were made up or modified, right?

Unfortunately, your emails aren’t really deniable – usually, when you send an email, any recipient (or thief) of that message can cryptographically prove to anyone that the message is valid and likely from you!

I’ve seen a number of reactions to this fact, including:

- Why do you care if email is deniable?

- Why isn’t email already deniable?

- Dude, we have tools for this, why can’t you just use OTR?

Yeah, I hear you. It turns out this is a hard, important, and somewhat complicated problem, one that I’ve worked on with my co-authors for almost a year.

For an applied cryptographer, studying email might seem somewhat odd. Admittedly, my other research projects look more important, or at least more flashy. And, while others have moved on to other messaging platforms, here I am fiddling with email.

The problem is, people still use email – it’s arguably the largest, most ubiquitous messaging service on the planet. Yet, it’s really messy and flawed, and a confluence of poor design decisions is causing real harm to real people.

In this blogpost I’ll give a quick explainer on the problem of email deniability, why it’s important, and our proposed solutions: KeyForge & TimeForge.

WikiLeaks & Email: A Retrospective on 2016

Email’s lack of deniability helped Russian intelligence muck with the 2016 election. I know, hear me out.

As a quick reminder, email was at the center of contention during the 2016 election, beyond the obvious “but her emails” snafu. Russian intelligence broke into both the Democratic National Committee’s email servers, and the Clinton campaign chairman’s email account, stealing thousands of personal and business messages. Both troves would later wind up in the hands of WikiLeaks, who then published both stores in easy-to-peruse online databases (Here’s the DNC’s and the campaign chairman Podesta’s).

At the time, publishing these emails was a huge risk to WikiLeaks, and incredibly perplexing from a strategic standpoint. Normally, WikiLeaks deals with whistleblowers handing over somewhat easily corroborated documents – hard-to-recreate code or other documents from those that can prove they’re from the organization they’re whistleblowing upon.

Here, WikiLeaks’ database came from a foreign intelligence agency deliberately attempting to muck with the election, one that had both opportunity and incentive to alter these messages to boost their salaciousness. Worse, WikiLeaks can only remain relevant as long as their releases continue to appear genuine; if any messages were proven to be false, it would have significantly harmed WikiLeaks’ reputation as an information broker.

So, why did WikiLeaks decide to risk publishing these messages despite their dubious sourcing? The short answer is that email is valuable both because it’s personal and because it’s not deniable.

Why Email isn’t Deniable: DKIM

Email is protected in part by a protocol called DomainKeys Identified Mail (DKIM), an IETF spec. DKIM, unfortunately, kills email deniability.

DKIM works like this: Whenever an email is sent from, say, Gmail.com, to MIT.edu, Gmail’s server cryptographically signs the message, and adds this signature into the email’s metadata (called “headers”). When the email is received by MIT, MIT’s servers look up Gmail’s public key via the DNS, and then use that public key to verify the message signature.

The problem with DKIM is that these signatures never expire, so the messages are verifiable in perpetuity.
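To make the sign-then-verify flow concrete, here is a minimal sketch in Python. It is a toy, not DKIM itself: it uses unpadded textbook RSA with small, publicly known primes, a dictionary (`FAKE_DNS`) standing in for the DNS, and a made-up selector `sel1` and domain `gmail.example`. Real DKIM also canonicalizes headers and uses padded RSA, which this skips.

```python
import hashlib

# Toy textbook RSA, for illustration only: unpadded and far too small to be secure.
P = 2**127 - 1                      # Mersenne prime
Q = 2**89 - 1                       # Mersenne prime
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)

def digest(message: bytes, n: int) -> int:
    """Hash the message and reduce it into the RSA group."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message: bytes) -> int:
    """Sending server: sign the message hash with the private key."""
    return pow(digest(message, N), D, N)

# Hypothetical stand-in for the DNS. DKIM publishes public keys in records
# named <selector>._domainkey.<domain>.
FAKE_DNS = {"sel1._domainkey.gmail.example": (N, E)}

def verify(message: bytes, signature: int, selector: str, domain: str) -> bool:
    """Receiver (or anyone else): fetch the public key and check the signature."""
    n, e = FAKE_DNS[f"{selector}._domainkey.{domain}"]
    return pow(signature, e, n) == digest(message, n)

msg = b"From: alice@gmail.example\r\n\r\nhello"
sig = sign(msg)
print(verify(msg, sig, "sel1", "gmail.example"))
```

Note that nothing in `verify` depends on who is calling it or when: anyone holding the message, the signature, and a copy of the public key can run the same check years later.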

So, if you were to receive a database of correctly-signed emails, there are only three possible explanations:

- All of the emailing participants’ accounts have been hacked;

- Google and Microsoft’s private keys were stolen; or

- this is a real leak.

Given the number of participants and diversity of email servers, Occam’s razor would indicate that the messages are likely real.

Getting back to the case of the DNC and Podesta, all WikiLeaks had to do to verify the Russian dump was to verify DKIM signatures using public keys from Google, Microsoft, and others. In fact, they could do so credibly, because anyone who wanted to do their own verification could easily do the math themselves and corroborate that the messages were correctly signed.

Don’t take my word for it: WikiLeaks wrote a fancy blog post about the subject, and below is the banner that you’ll see if you look up a verified email in their stolen email database:

Now, you might not be motivated by helping out Mr. Podesta or the DNC in particular, but it’s important to keep in mind that this is all just an illustration of a larger problem – the rest of us all use email too. Other vulnerable groups like activists and whistleblowers also need to be able to communicate, and deniable messaging lowers the incentive for malicious insiders to sell such messages en masse.

How did we get here?

“…One might say a DKIM signature is in a similar moral space as the “super cookies” that some providers put into web connections (like Verizon, who the FTC fined $1.3M). It is a tracking thing that is put without the user’s consent into their email that can be used for identification later without their knowledge. Worse, it’s not merely a cookie that’s a constant, it’s a content-specific digital signature. Shock horror.” —Jon Callas, one of the original authors of the DKIM RFC

At this point, one might be (rightfully) annoyed that DKIM exists. Most users don’t know that their emails are publicly verifiable, and might assume that they can plausibly deny a message if it fell into the wrong hands. Deniability is a basic property of most modern messaging systems, and it is truly bizarre that an unencrypted medium like email is somehow also cryptographically signed.

Unfortunately, DKIM serves a really useful purpose — it prevents your email server from accepting spam and messages that lie about where they come from. It would be terrible, for instance, if Gmail couldn’t verify if a message from your bank was actually valid. That’s why DKIM is a thing.

So, why don’t normal deniable key exchange protocols work?

The short answer is that email is an incredibly weird ecosystem with a number of odd caveats that wind up making interactivity between the sending and receiving servers difficult.

Email is asynchronous and often one-directional; a server may send an email and never get a response from the destination server. The email ecosystem happily encourages “multihop” / store-and-forward scenarios where messages travel through untrusted third parties until they finally hit your receiving server. This behavior appears to happen quite often – a quick scan of the DNS records for the top 150k websites shows that roughly 22% of email servers send their messages through some sort of third-party email system.

This, by the way, includes MIT:

In the above, we see that the DNS MX record for MIT.edu (which is how a mail server knows where to send mail for a domain) points to protection.outlook.com, a Microsoft owned domain / server.

So, not only do Google and MIT have signed copies of all of my emails, so does Microsoft. And, worse, there’s really no way the average user would understand any of this.

Unfortunately, these third parties actually serve a useful purpose – malware detection and load balancing. Smaller organizations don’t want to worry about handling DDoS attacks, or incoming malware scans, so they rationally outsource this to third parties.

Worse, people often use third-party systems that pretend to be end-user clients instead of email servers to forward messages. For example, when you use Gmail to send messages from your school’s email account, Gmail is acting the same way a desktop email client would, but there’s no way that the sending server can know that Gmail still needs to verify the signatures.

If the endpoints were known, and latency / asynchronicity weren’t such an overriding concern, any deniable protocol like Off The Record (OTR) would be sufficient.

The high latency of email communication makes using our “off-the-record” protocol impractical in the setting of email. —Off-the-Record Communication, or, Why Not To Use PGP

Other solutions have been proposed in the past, like leveraging ring signatures,

Another solution would be to rotate DKIM keys often, and publicly expose the private key at a fixed time. While this would work, there are a ton of practical concerns that make this difficult. E.g.:

- Updating the DNS is not organizationally easy, and it takes time and effort for such records to get to where they’re supposed to go, especially in the face of caching and other optimizations.

- Manual key rotation requires staff to perform the action, which increases costs and the probability of misconfiguration, key theft, and loss of service.

- Administrators would need to keep old, expired secret keys around and available indefinitely to continue being provably deniable.

Our solutions: KeyForge and TimeForge

Due to operational concerns, any replacement for DKIM must have the following properties:

- We can only modify the signing and verification parts of the protocol

- Signatures must be verifiable for only a short time, e.g. 15 minutes

- Long-lived public keys, same as DKIM now

- It must be non-interactive – the signer should not need to know anything about the receiver, and the receiver should not need to connect back to the signer to verify

- Quick signing & verification, and low bandwidth requirements per email, preferably equivalent to DKIM’s standard 2048-bit RSA

- If there exists some additional metadata to make the signatures forgeable, it must be succinct – small and easily distributed – e.g. as an additional part of the email header

We couldn’t find a cryptographic definition that fell into our use-case, so we created a new one called a “Forward Forgeable Signature” (FFS). An FFS is a signature scheme whose validity “expires” after a certain timespan — the signature itself is forgeable and useless after that time lapses — but whose public key remains viable for other unexpired signatures.

Our paper introduces two FFS constructions, called KeyForge and TimeForge respectively, each representing different tactics of achieving the same set of goals.

KeyForge

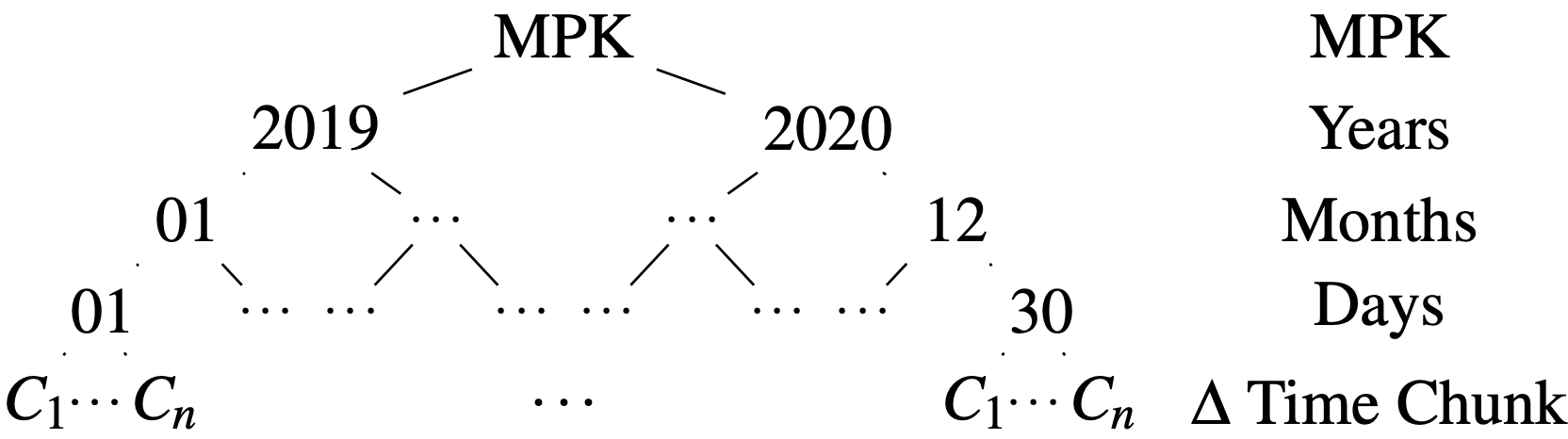

In a nutshell, KeyForge works by divulging private keys over time from a special hierarchical signature scheme (a modified Gentry-Silverberg bilinear-map based HIBE scheme).

At any given time, KeyForge can remain succinct because it generates a neat tree of public/private keypairs where exposing a node’s private key also exposes that node’s children’s keys, but not its parents’. If you then tag each layer of the tree as a Master/Year/Month/Day/15-minute “chunk”, you get something like this:

So, for this KeyForge layout, one can reveal the private key for, say, December 2020, and it’ll result in all signatures generated from that entire month being forgeable. In reality, we’ll get further succinctness by keeping an equal branching factor at each layer, but this structure is easier to explain.

The cool thing here is that KeyForge is fast and the signatures are actually surprisingly small. In fact, the signatures are smaller than DKIM’s current standard, RSA2048. As a result, a server could even attach expired private keys to outgoing emails, just to be sure that old messages are forgeable.
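The Year/Month/Day/chunk disclosure structure can be sketched in a few lines of Python. This is only an illustration of the tree shape, not KeyForge’s actual construction: it swaps in HMAC-based one-way key derivation (symmetric keys) for the hierarchical signature scheme, so there is no public verification key here. The helper names `child_key`, `chunk_path`, and `derive` are made up for this example.

```python
from __future__ import annotations

import hashlib
import hmac
from datetime import datetime

def child_key(parent_key: bytes, label: str) -> bytes:
    """One-way derivation: a parent key yields its children, never the reverse."""
    return hmac.new(parent_key, label.encode(), hashlib.sha256).digest()

def chunk_path(ts: datetime) -> list[str]:
    """Year / Month / Day / 15-minute-chunk labels for a timestamp."""
    chunk = (ts.hour * 60 + ts.minute) // 15   # 0..95 within the day
    return [f"{ts.year:04d}", f"{ts.month:02d}", f"{ts.day:02d}", f"{chunk:02d}"]

def derive(key: bytes, labels: list[str]) -> bytes:
    """Walk down the tree from `key`, one label per layer."""
    for label in labels:
        key = child_key(key, label)
    return key

master = b"\x00" * 32                      # placeholder master secret
path = chunk_path(datetime(2020, 12, 25, 13, 37))

# The sender derives the key for the exact 15-minute chunk from the master key.
leaf_from_master = derive(master, path)

# Later, the sender publishes only the "2020/12" node. Anyone holding it can
# re-derive every day and chunk key under December 2020, including this leaf.
december_2020 = derive(master, path[:2])
leaf_from_month = derive(december_2020, path[2:])

print(leaf_from_master == leaf_from_month)
```

One disclosed node covers a whole subtree, which is why the expiry information stays small, while keys for other months and the master key remain hidden because the derivation cannot be run backwards.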

TimeForge

The biggest problem with KeyForge is the need for the sending server to reveal and distribute expired keys at all. TimeForge solves this problem by providing forgeability in a slightly different setting.

TimeForge works by assuming there is some trusted entity out there that signs timestamps every so often (like NIST’s Randomness Beacon); we call this a Publicly Verifiable Time Keeper (PVTK). TimeForge then uses a special signature that is forgeable if a signed timestamp from the PVTK exists that is greater than some timeout. Specifically, we create a Witness Indistinguishable Proof of Knowledge (WIPOK) of the following statement: I have a range proof on a signed timestamp greater than $n$, OR this message was signed by us.

Slightly more formally, the idea is to substitute each signature on a message $m$ at time $t$ with a succinct zero-knowledge proof of the statement $S(m)\vee T(t+\Delta)$, where: $S(m)$ denotes knowledge of a valid signature by the sender on $m$ and $T(t+\Delta)$ denotes knowledge of a valid timestamp for a time later than $t+\Delta$.
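One way to write the statement $S(m)\vee T(t+\Delta)$ as a relation between a public instance and a secret witness $w$ is sketched below. The symbols $pk_S$ (the sender’s public key), $pk_{TK}$ (the PVTK’s public key), $\mathsf{Verify}$, $t'$, and $\sigma'$ are notation assumed for this illustration and may not match the paper’s:

$$
R = \Big\{ \big((m, t, \Delta),\, w\big) \;:\; \underbrace{\mathsf{Verify}(pk_S, m, w) = 1}_{S(m)} \;\lor\; \underbrace{w = (t', \sigma') \,\wedge\, t' > t + \Delta \,\wedge\, \mathsf{Verify}(pk_{TK}, t', \sigma') = 1}_{T(t+\Delta)} \Big\}
$$

Before time $t+\Delta$, no PVTK timestamp satisfying the right-hand branch exists, so only the sender can produce a valid proof. Once such a timestamp is published, anyone can satisfy the right-hand branch, and because the proof is witness indistinguishable, a verifier cannot tell which branch was used.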

I won’t go into further details about how KeyForge and TimeForge work here, but you can read about them in the full paper. We concluded that KeyForge was likely practical today (especially since the primitives are well understood), but that TimeForge required further trickery and speed improvements to the underlying primitives to be efficient enough for both space and time.

For my fellow crypto nerds: we initially tried the more obvious/exotic tools for this, zkSNARKs and Bulletproofs, but found that these didn’t work well for us in practice due to large signature sizes (for Bulletproofs) and the cost of embedding the signature verification circuit (zkSNARKs), and wound up using a combination of Schnorr-style proofs, Boneh-Boyen signatures, and a range proof. Since we began our work, libraries like libSpartan were developed, so it’s likely that TimeForge can be made much faster and much more practical with some further creativity & effort.

Some takeaways

“This document explains why the IAB [Internet Architecture Board] believes that, when there is a conflict between the interests of end users of the Internet and other parties, IETF decisions should favor end users.” —IETF RFC 8890: The Internet is for End Users

This year, the IETF adopted RFC 8890, which stated, point-blank, that “The Internet is for End Users.” The document houses a normative statement: in the design of new protocols, we must put the average user ahead of company, government, or other needs. I applaud the IETF’s aspirations, but we need to do more.

First, we need to begin fixing old standards. New cryptography and new attacks have come to light since many of the protocols we rely on have been implemented, and the natural tendency of tech folks like myself is to create new standards and protocols before fixing the old; Signal or the IETF’s own Messaging Layer Security MLS,

Second, while RFC 8890 is a fantastic values statement – that standards authors should put the needs of the user first – it would be a remarkably bad idea to use this as a justification for ignoring incentives altogether. This research serves as a worked example of how a narrow binary definition of “secure” vs “not secure” is itself a failure mode of security design. By accepting that there is an incentive model around attackers, we can increase costs, decrease the benefits of malfeasance, and create better designs to protect users.

– Mike Specter is a final-year PhD candidate in computer science at MIT, click on my name to learn more.