Li Ding | 丁立

I'm a 4th-year Ph.D. candidate at UMass Amherst CICS, advised by Lee Spector. I work closely with Scott Niekum (UMass), Joel Lehman (StabilityAI), Jeff Clune (UBC, DeepMind), and also spent time at Google Research and Meta.

Before my Ph.D., I was a full-time research engineer at MIT with Lex Fridman and Bryan Reimer, and concurrently a graduate student at MIT CSAIL. I did my master's at the University of Rochester with Chenliang Xu.

liding@{umass.edu, mit.edu}

11/2023 - I'm looking for job opportunities in both industry and academia, starting 09/2024. If you think my background is a good fit for your organization, let's chat!

Research

My research focuses on efficient learning algorithms for large AI models, in particular:

- Alignment: preference-based learning, RLHF, and aligning models with human interests.

- Open-Endedness: generative models and adaptive agents for diversity-driven tasks and/or in open-ended environments.

- Interdisciplinary: applications in quantum computing and human-computer interaction.

Before my Ph.D., I worked on deep learning for autonomous driving, human behavior modeling, and action recognition.

Publications

Representative papers are highlighted.

Quality Diversity through Human Feedback

Li Ding, Jenny Zhang, Jeff Clune, Lee Spector, Joel Lehman

NeurIPS 2023: Agent Learning in Open-Endedness (ALOE) Workshop (Spotlight)

[project page]

[arXiv]

[code]

[tutorial]

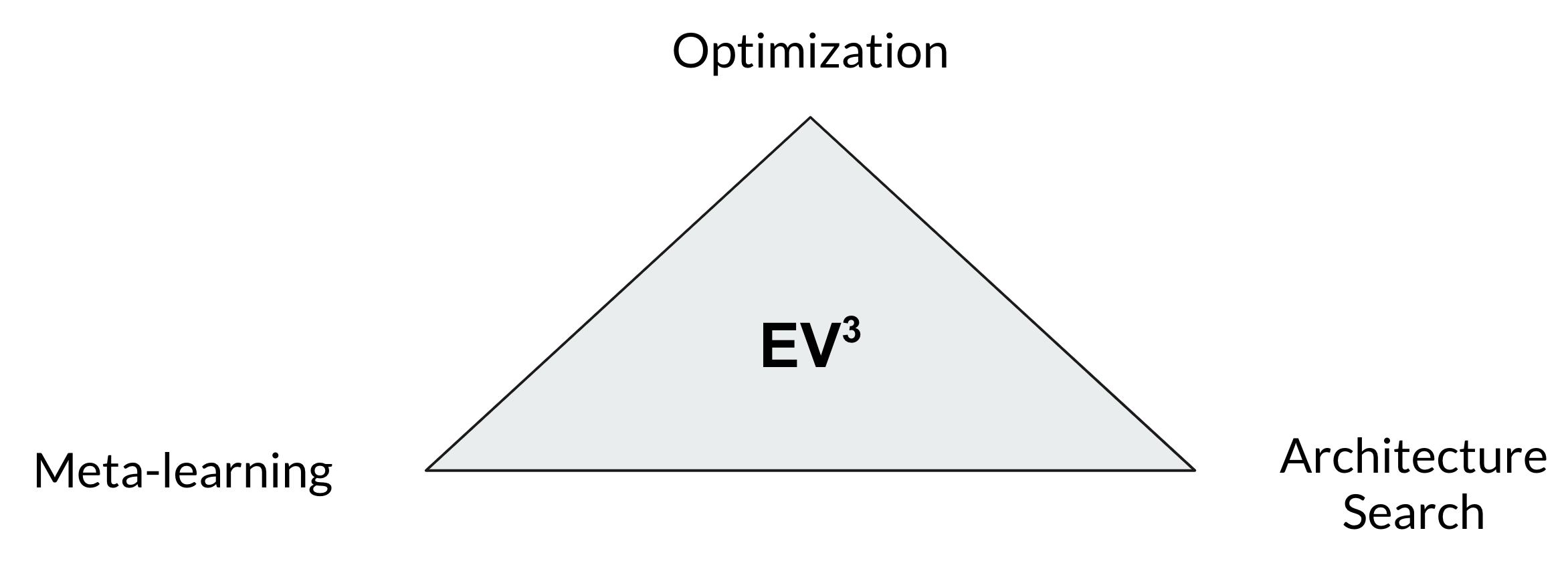

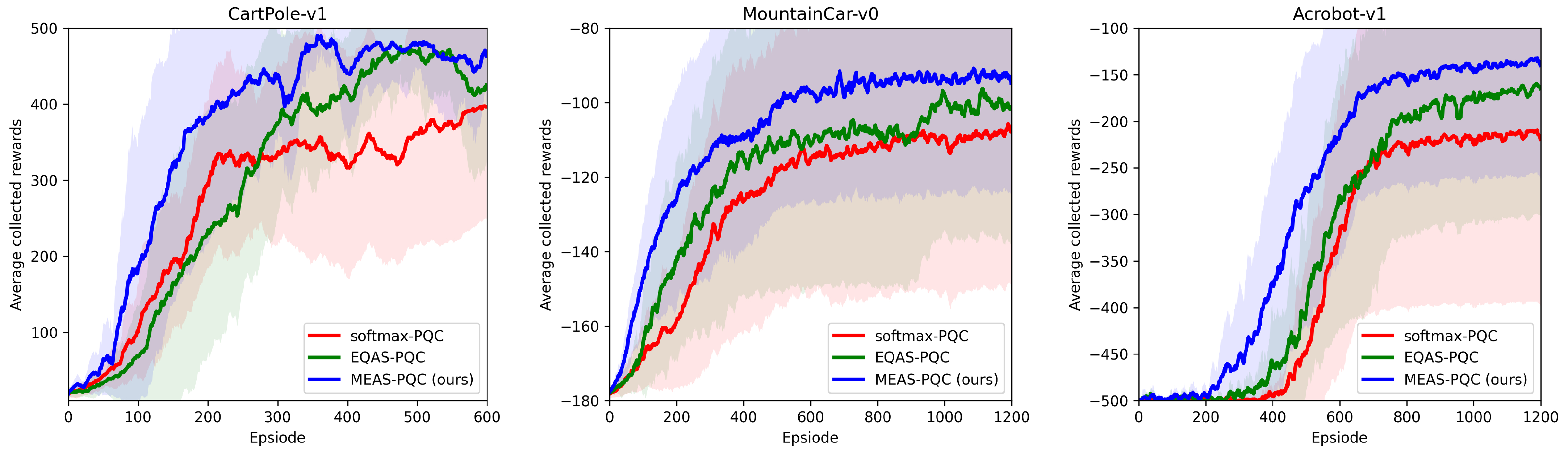

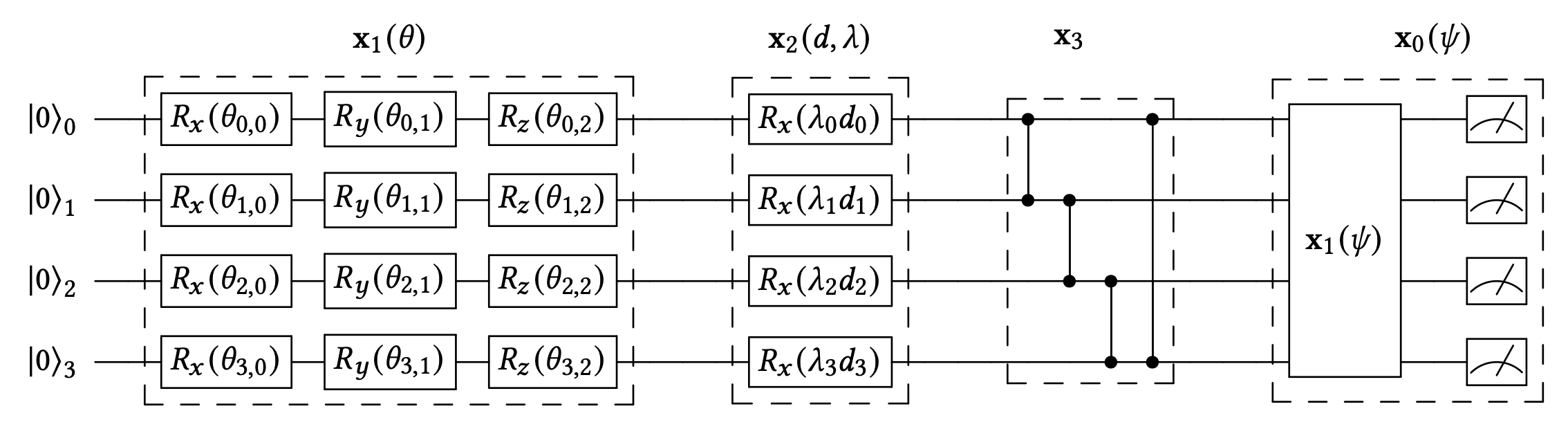

Multi-Objective Evolutionary Architecture Search for Parameterized Quantum Circuits

Li Ding, Lee Spector

Entropy (Special Issue: Quantum Machine Learning), 2023

[paper]

Objectives Are All You Need: Solving Deceptive Problems Without Explicit Diversity Maintenance

Ryan Boldi, Li Ding, Lee Spector

NeurIPS 2023: Agent Learning in Open-Endedness Workshop

[arXiv]

Particularity

Lee Spector, Li Ding, Ryan Boldi

Genetic Programming Theory and Practice XX, 2023

[arXiv]

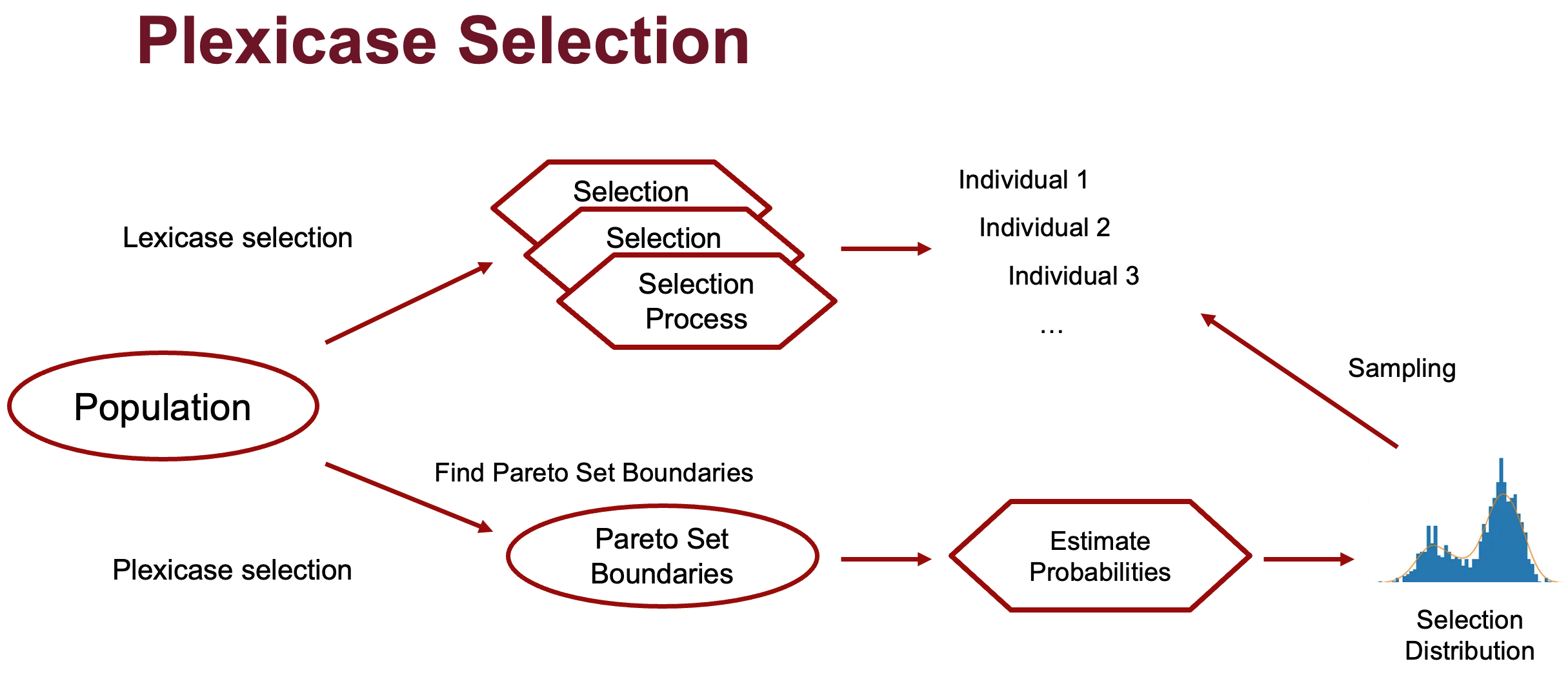

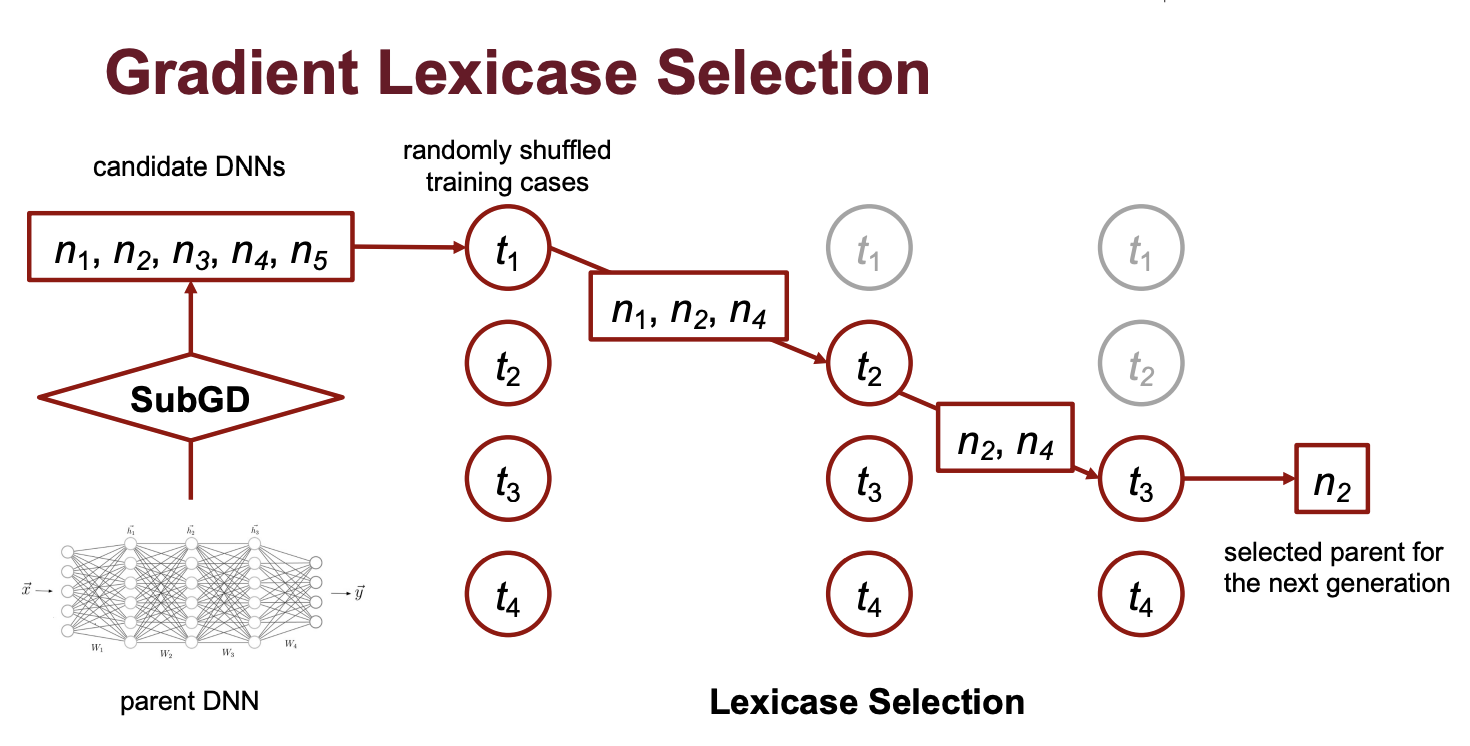

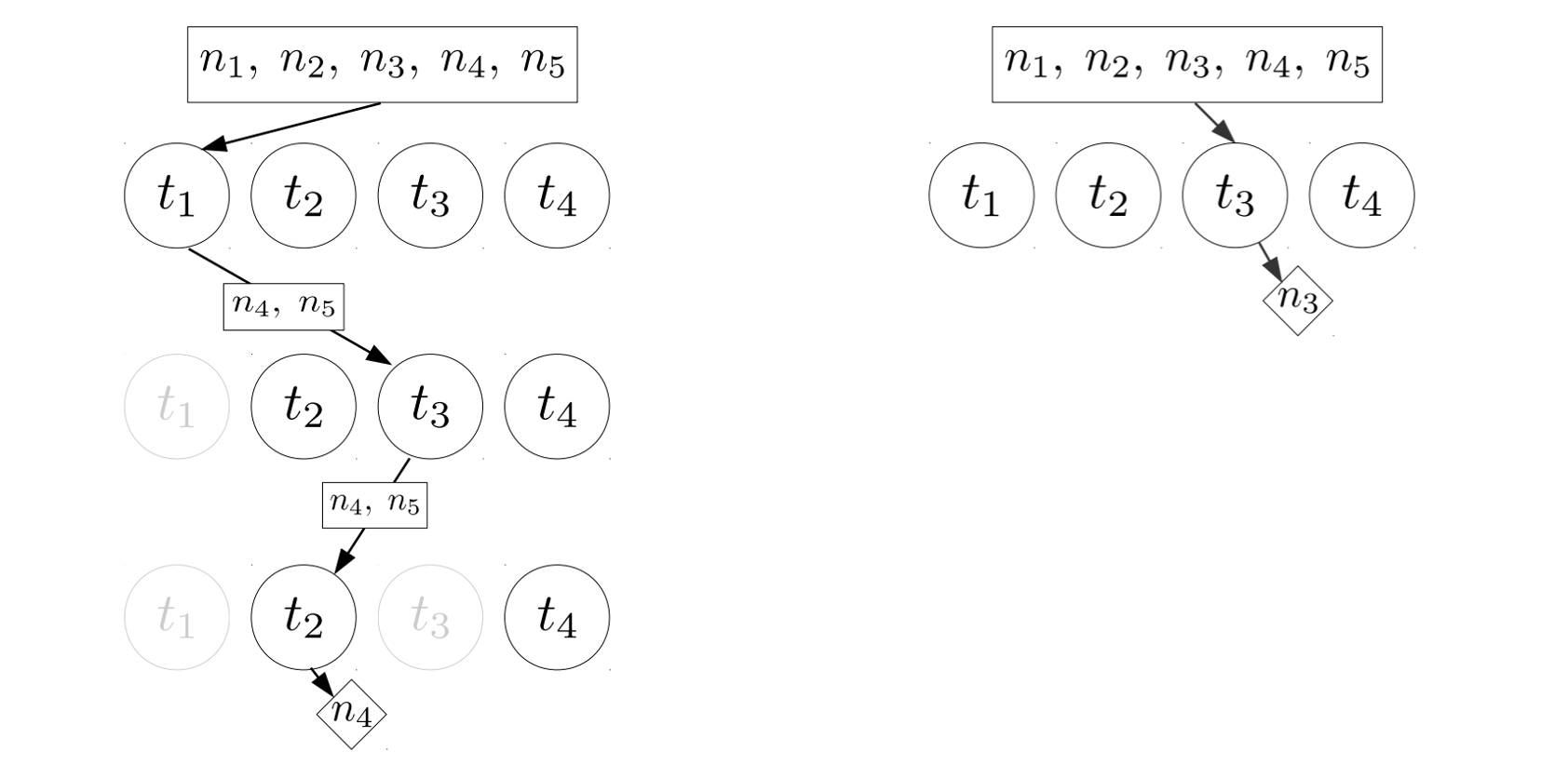

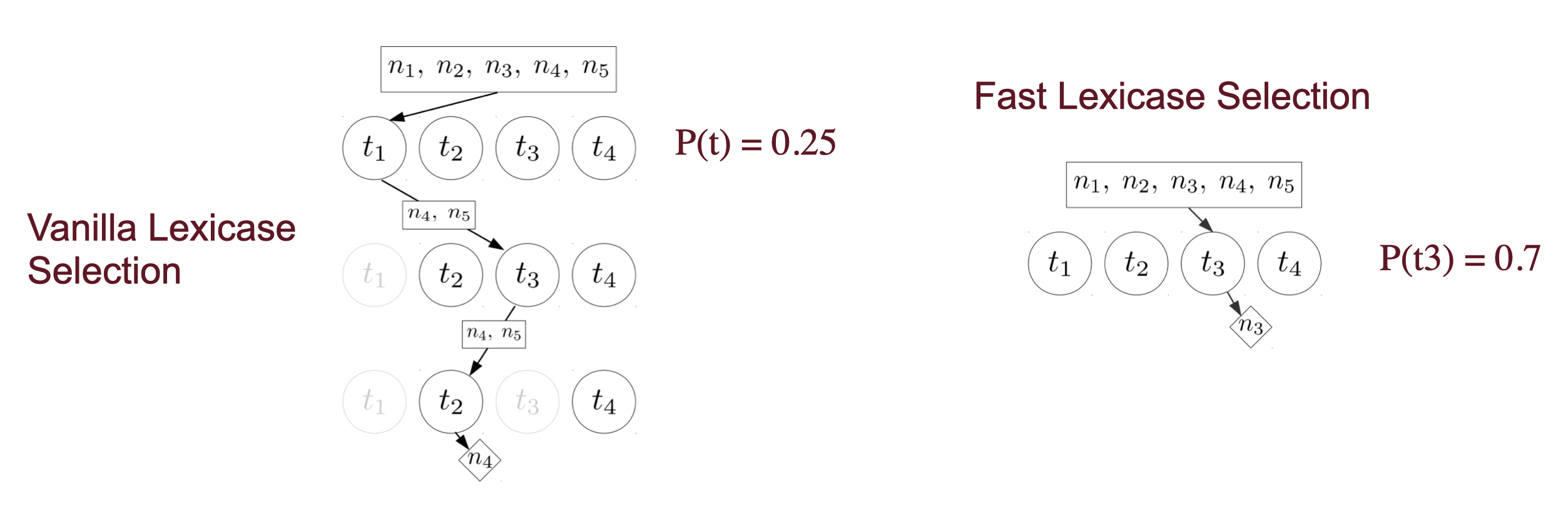

Going Faster and Hence Further with Lexicase Selection

Li Ding, Ryan Boldi, Thomas Helmuth, Lee Spector

GECCO 2022 (poster)

[paper]

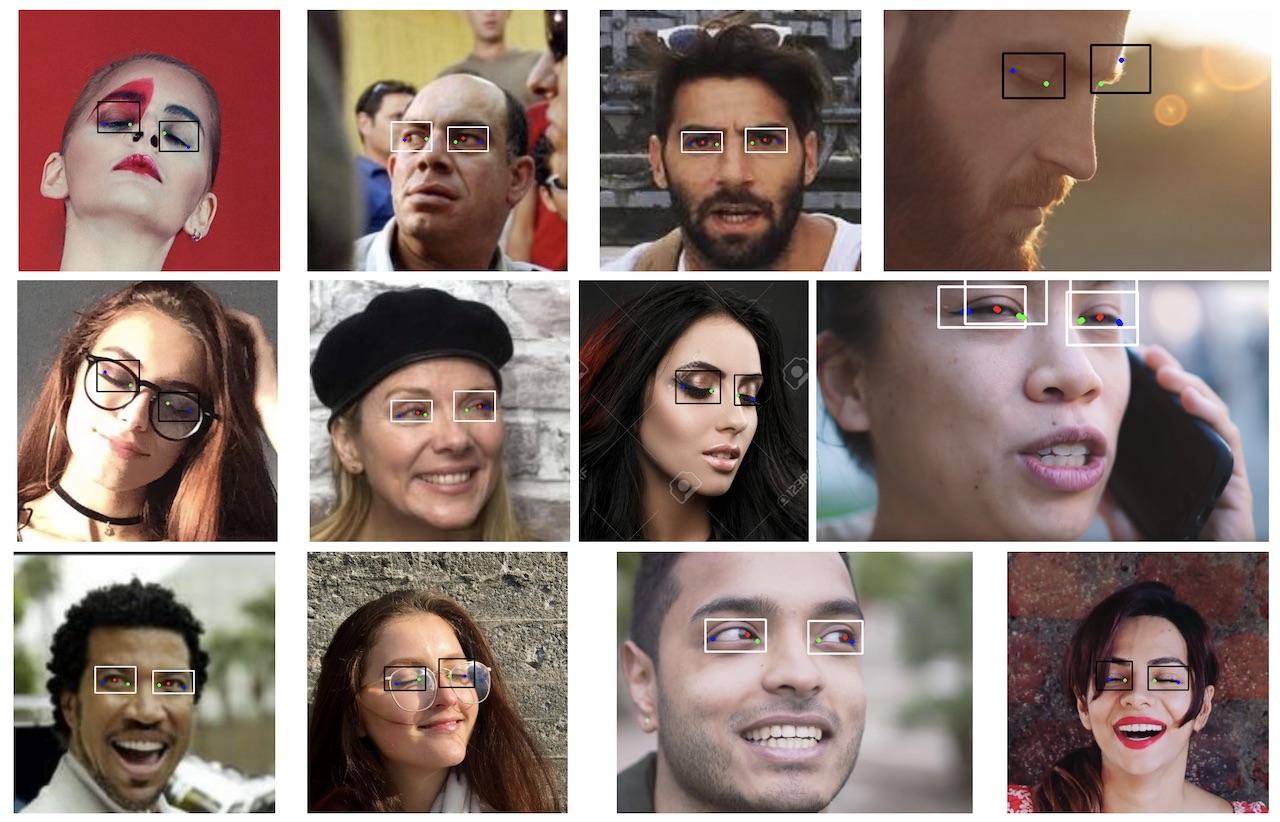

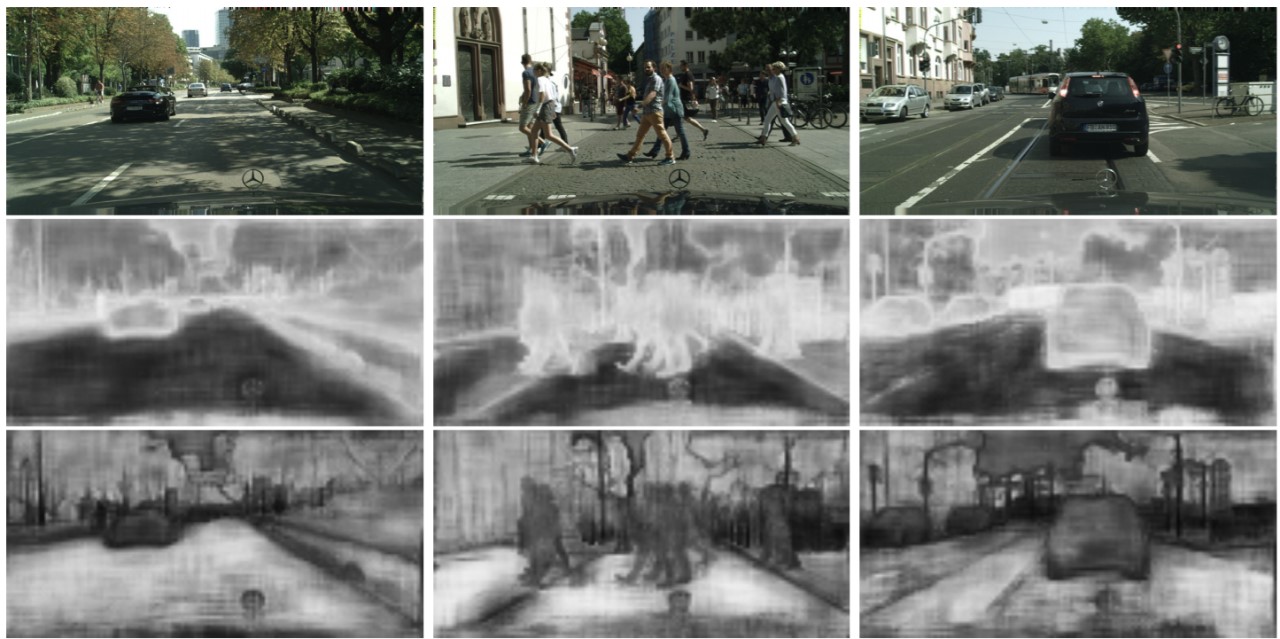

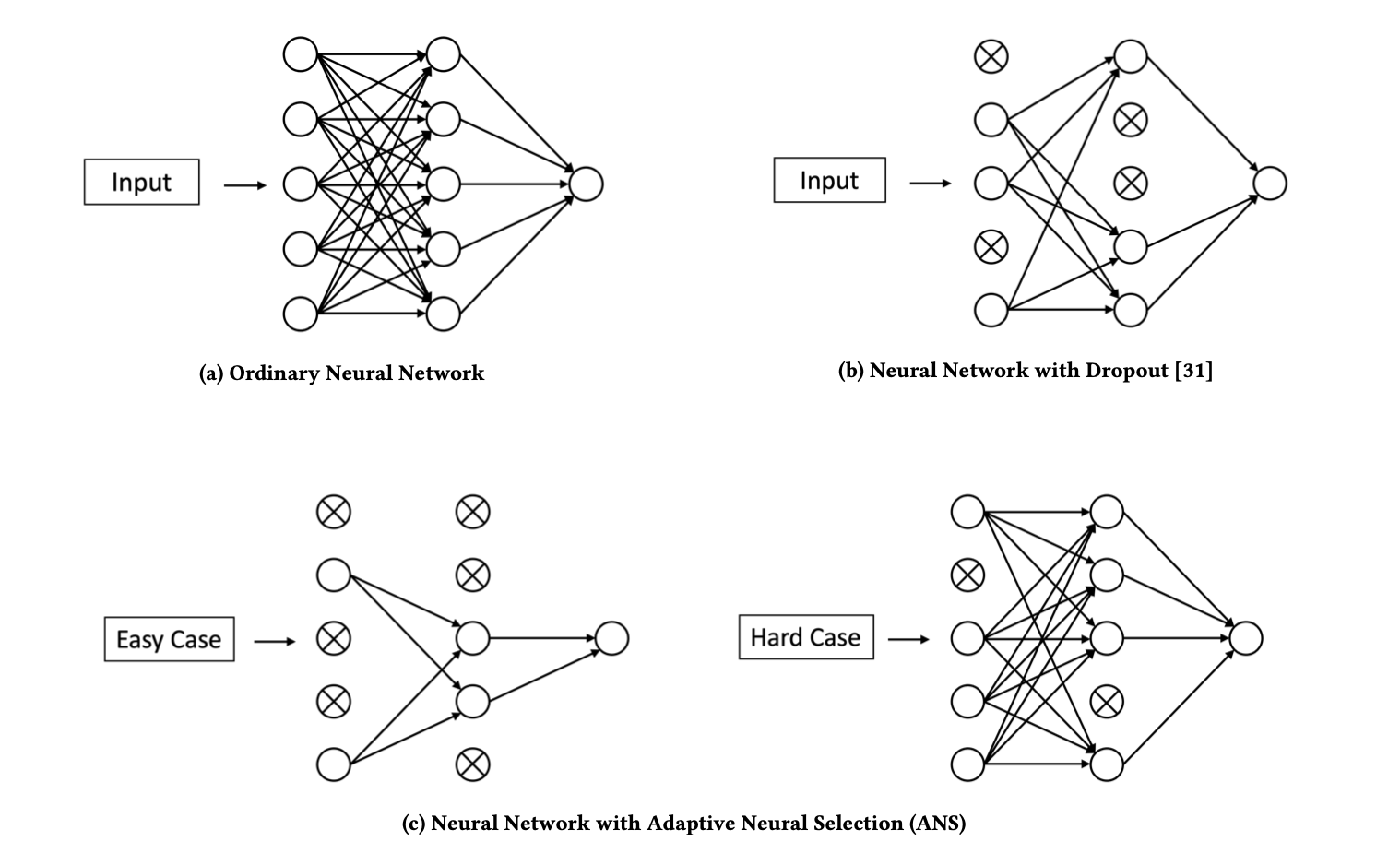

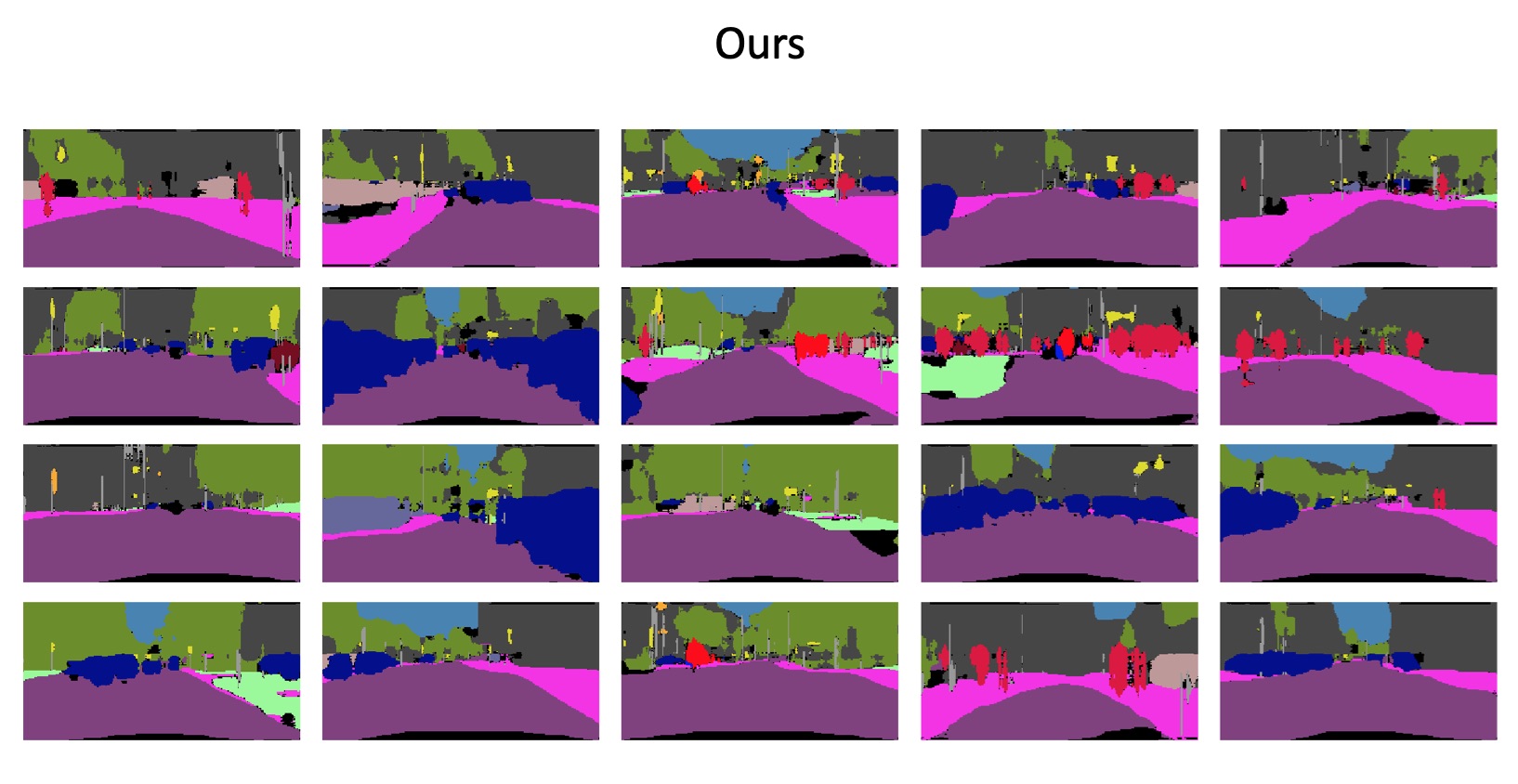

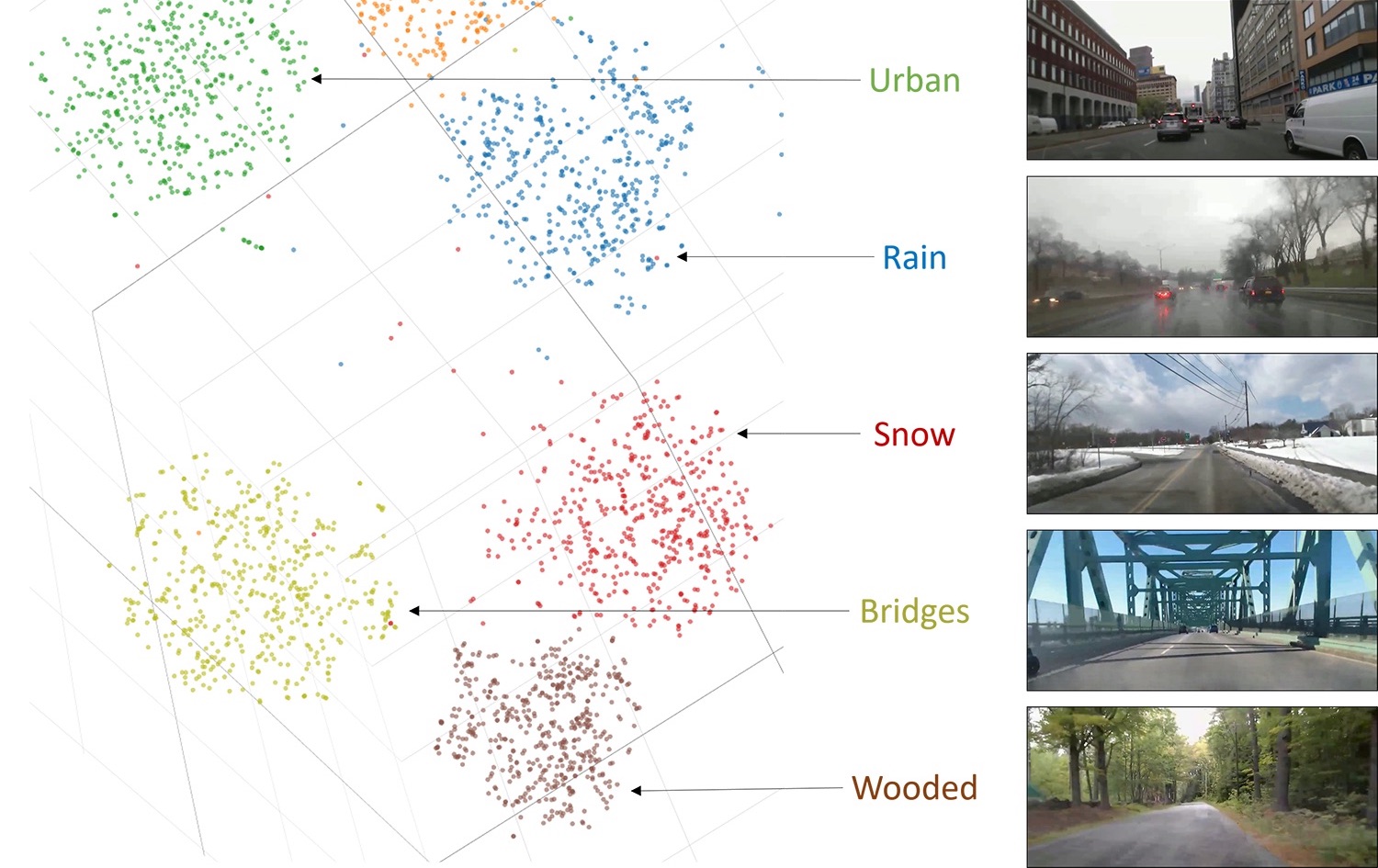

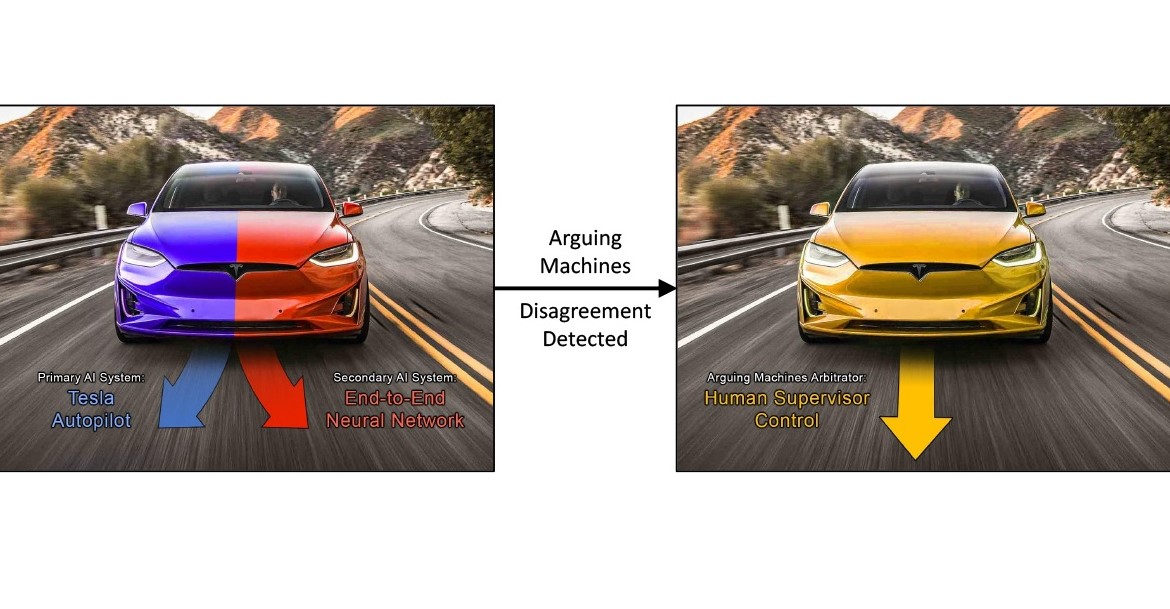

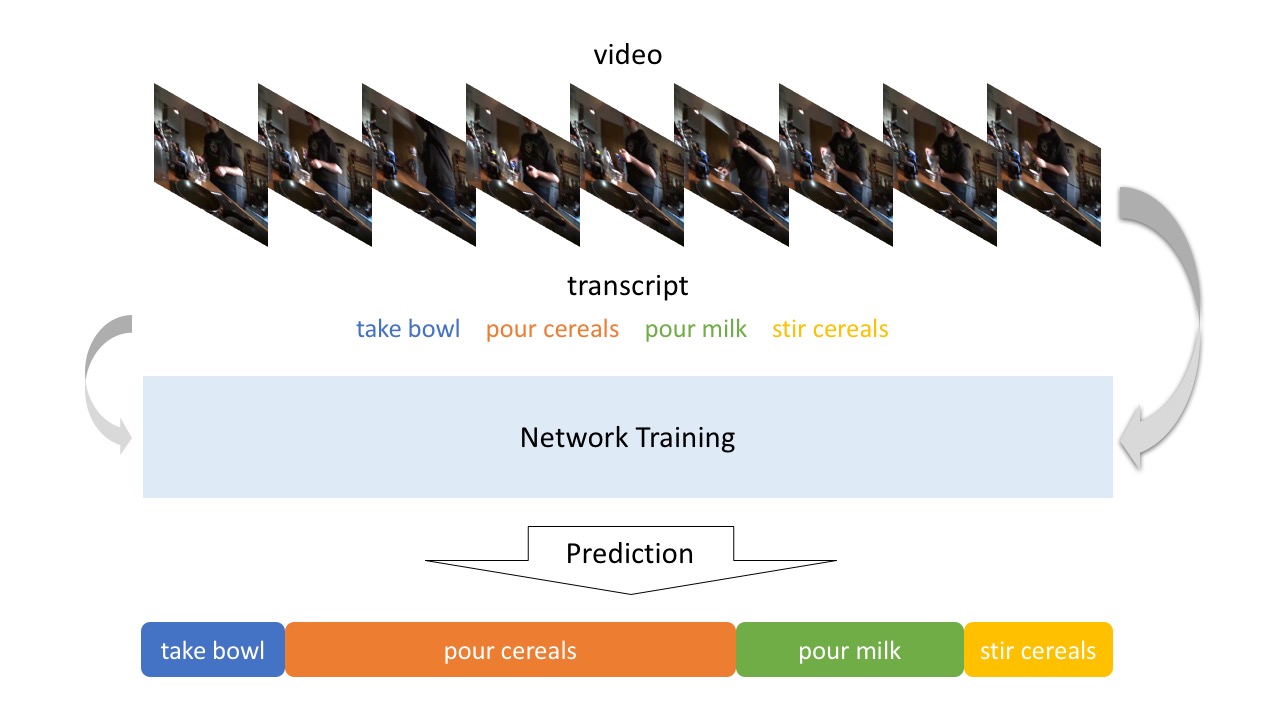

Value of Temporal Dynamics Information in Driving Scene Segmentation

Li Ding, Jack Terwilliger, Rini Sherony, Bryan Reimer, Lex Fridman

IEEE Transactions on Intelligent Vehicles, 2021

[paper]

[arXiv]

[MIT DriveSeg Dataset]

Press coverage: [MIT News] [Forbes] [InfoQ] [TechCrunch]

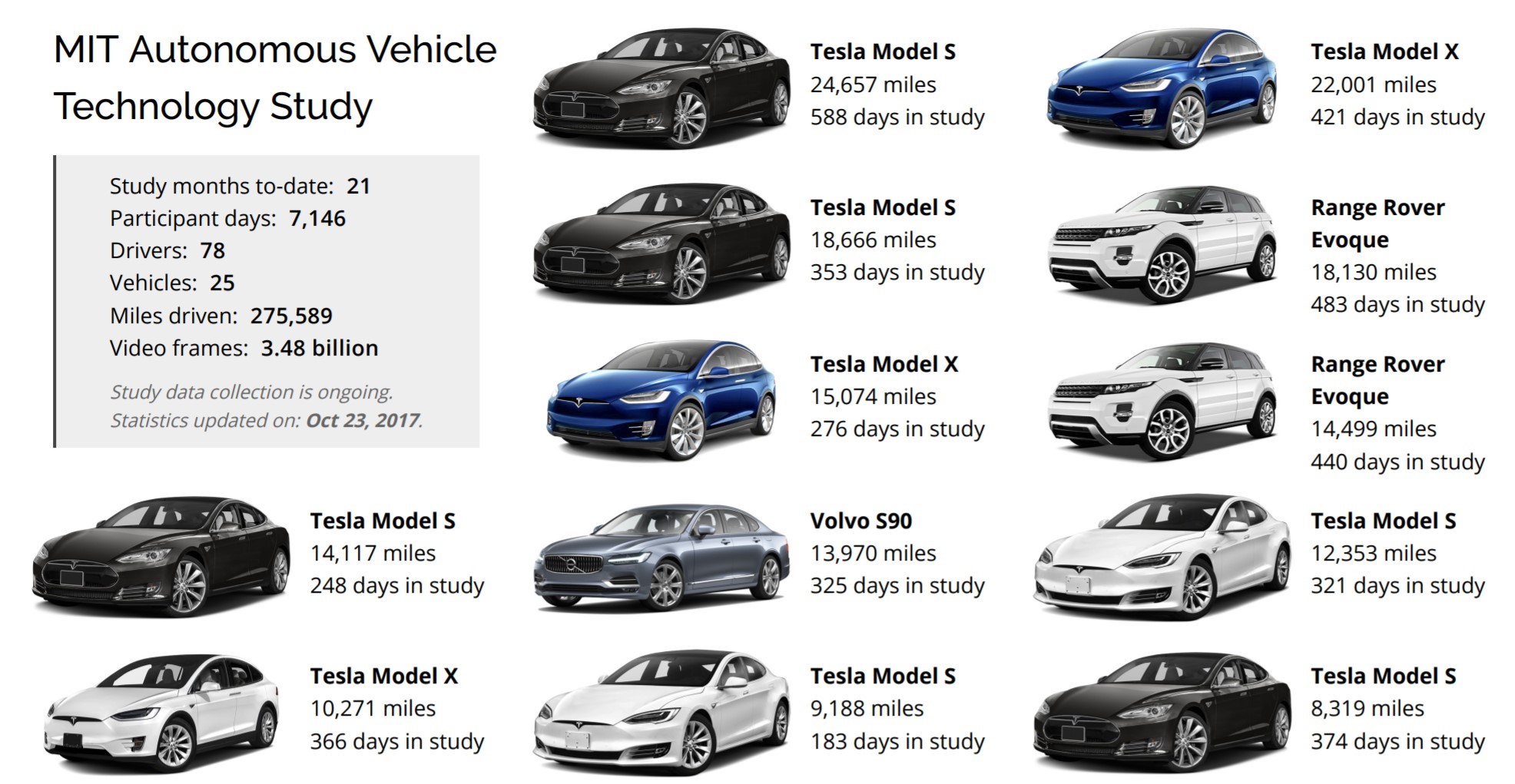

MIT Advanced Vehicle Technology Study: Large-Scale Naturalistic Driving Study of Driver Behavior and Interaction with Automation

Lex Fridman, Daniel E. Brown, Michael Glazer, William Angell, Spencer Dodd, Benedikt Jenik, Jack Terwilliger, Julia Kindelsberger, Li Ding, Sean Seaman, Alea Mehler, Andrew Sipperley, Anthony Pettinato, Bobbie Seppelt, Linda Angell, Bruce Mehler, Bryan Reimer

IEEE Access, 2019

[paper]

[arXiv]

[video]

Human Interaction with Deep Reinforcement Learning Agents in Virtual Reality

Lex Fridman, Henri Schmidt, Jack Terwilliger, Li Ding

NeurIPS 2018: Deep RL Workshop

Misc.

Teaching:

TA for UMass COMPSCI 230: Computer Systems Principles (Summer 2021).

TA for MIT 6.S094: Deep Learning for Self-Driving Cars (Winter 2018-19).

TA for MIT 6.S099: Artificial General Intelligence (Winter 2019).

Conference Reviewer:

ICLR 2024, AAAI 2024, NeurIPS 2023, ICCV 2023, CVPR 2023, IJCNN 2022, IV 2021-2023, BMVC 2020, 2021, 2023, AutoUI 2020.

Journal Reviewer:

IEEE Transactions on Intelligent Vehicles, Quantum Machine Intelligence, Pattern Recognition, IEEE Transactions on Circuits and Systems for Video Technology.

AI Podcast:

Helped prepare interview questions, find guest speakers, etc., for a podcast hosted by Lex Fridman about technology, science, and the human condition.

(Ranked #1 on Apple Podcasts in the technology category, with 1M views on YouTube.)

(My personal favorite episode is the one with Tomaso Poggio. Highly recommended!)

Robocar Workshop:

Instructor, with Dr. Tom Bertalan, for a summer/winter workshop at MIT teaching college and high school students to build and program autonomous robocars.