Marvin Minsky, "Memoir on Inventing the Confocal Scanning Microscope," published in Scanning, vol. 10, pp. 128-138, 1988.

Editorial Note

In this issue, we carry an article that we invited Prof. Marvin Minsky to write about his invention of the confocal scanning microscope. This is not a question of recognizing priority for a scientific insight or discovery. It is much more a question of raising the problem of how such an immensely important idea could go unrecognized for so long. Further research may well show that yet another person discovered the same idea. That does not matter. The fact is that Minsky invented a microscope identical in concept to those later developed extensively by Egger and Davidovits at Yale, by Sheppard and Wilson in Oxford, by Brakenhoff and colleagues in Amsterdam, and by others. The circumstances are also remarkable in that Minsky published his invention only as a patent. Yet he not only built a microscope and made it work, a prototype of which any of us would be proud, but also showed it to a number of people who went away impressed but nevertheless failed to adopt the concept.

We have also secured a copy of Minsky's original letter to his patent agent, which we reproduce verbatim to show the clarity with which he was able to describe the concept and its future potential. The original patent is also excellent reading, but since it is freely available, we have copied only its figures.

A. Boyde

Memoir on Inventing the Confocal Scanning Microscope

Marvin Minsky

This is what I remember about inventing the confocal scanning microscope in 1955. It happened while I was making a transition between two other theoretical preoccupations, and I had never thought back to that period until Alan Boyde suggested writing this memoir. Reading the following account now, I find the plot more coherent than it ever seemed at the time. Perhaps, though, the activities that seemed to me the most spontaneous were actually the ones that were, unconsciously, managed the most methodically.

The story actually begins in childhood, for my father was an ophthalmologist and our home was simply full of lenses, prisms, and diaphragms. I took all his instruments apart, and he quietly put them together again. Later, when I was an undergraduate at Harvard in the class of 1950, there were new wonders every day. I studied mathematics with Andrew Gleason, neurophysiology with John Welsh, neuroanatomy with Marcus Singer, psychology with George Miller, and classical mechanics with Herbert Goldstein. But perhaps the most amazing experience of all was in a laboratory course in which students had to reproduce great physics experiments of the past. To ink a zone plate onto glass and see it focus on a screen; to watch a central fringe emerge as the lengths of two paths become the same; to measure those lengths to the millionth part with nothing but mirrors and beams of light: I had never seen anything so strange.

For graduate studies I moved to Princeton to study more mathematics and biology, and wrote a theoretical thesis on connectionistic learning machines - that is, on networks of devices based on what little was known about nerve cells.

For as long as I can remember, I have been entranced by all kinds of machinery, and early in my college years I tried to find out how the great machines that we call brains managed to feel and learn and think. I studied everything available about the physiology, anatomy, and embryology of the nervous system. But there were simply too many gaps; nothing was known about how brains learn. Nevertheless, it occurred to me, you might be able to figure that out - if only you knew how those brain cells were connected to each other. Then you could attempt some of what is now called "reverse engineering": guessing what a circuit's components do from knowing both what the circuit does and how its parts are connected. But I was horrified to learn that even those connection schemes had never been properly mapped at all. To be sure, a good deal was known about the shapes of certain types of nerve cells, because of the miraculous way in which the Golgi treatment tends to pick out a few neurons and then stain all the fibres that extend from them. But this lets you visualize only one cell at a time, whereas to obtain the required wiring diagram you need to make visible all the cells in a three-dimensional region. And here was a critical obstacle: the tissue of the central nervous system is solidly packed with interwoven parts of cells. Consequently, if you succeed in staining all of them, you simply can't see anything. This is not merely a problem of opacity because, if you put enough light in, some will come out. The serious problem is scattering. Unless you can confine each view to a thin enough plane, nothing comes out but a meaningless blur. Too little signal compared to the noise: the problem kept frustrating me.

After completing that doctoral thesis, I had the great fortune to be invited to become a Junior Fellow at Harvard. That three-year membership in the Harvard Society of Fellows carries unique privileges: there is no obligation to have students, responsibilities, or supervisors, and all doors to the university are opened; one is bound only by a simple oath to seek whatever seems the truth. This freedom was just what I needed then, because I was making a change in course. With the instruments of the time so weak, there seemed little chance to understand brains, at least at the microscopic level. So, during those years I began to imagine another approach. Perhaps we could work the other way: begin with the large-scale things minds do and try to break those processes down into smaller and smaller ingredients. Perhaps such studies could help us to guess more about the low-level processes that might be found in brains. Then, perhaps we could combine what we learned from both "top down" and "bottom up" points of view, and eventually close in on the problem from two directions.

In the course of time, that new top-down approach did indeed become productive; it soon assumed the fanciful name, Artificial Intelligence. But that is a different story, and the only part relevant here is what happened to me in that interlude. I now felt that while it might take decades to learn enough more about the brain, Artificial Intelligence could be tackled straight away - but my ideas about doing this were not yet quite mature enough. So (it seems to me in retrospect) while those ideas were incubating, I had to keep my hands busy, and solving that problem of scattered light became my conscious obsession. Edward Purcell, a Senior Fellow of the Society of Fellows, obtained for me a workroom in the Lyman Laboratory of Physics, with a window facing Harvard Yard and permission to use whatever shops and equipment I might need. (That room had once been Theodore Lyman's office. Under an old sheet of shelf paper I found a bit of diffraction grating that had likely been ruled, I was awed to think, by the master spectroscopist himself.) One day it occurred to me that the way to avoid all that scattered light was never to allow any unnecessary light to enter in the first place.

An ideal microscope would examine each point of the specimen and measure the amount of light scattered or absorbed by that point. But if we try to make many such measurements at the same time, then every focal image point will be clouded by aberrant rays of scattered light deflected from points of the specimen that are not the point you're looking at. Most of those extra rays would be gone if we could illuminate only one specimen point at a time. There is no way to eliminate every such ray, because of multiple scattering, but it is easy to remove all rays not initially aimed at the focal point: just use a second microscope (instead of a condenser lens) to image a pinhole aperture onto a single point of the specimen. This reduces the amount of light in the specimen by orders of magnitude without reducing the focal brightness at all. Still, some of the initially focused light will be scattered by out-of-focus specimen points onto other points in the image plane. But we can reject those rays as well, by placing a second pinhole aperture in the image plane that lies beyond the exit side of the objective lens. We end up with an elegant, symmetrical geometry: a pinhole and an objective lens on each side of the specimen. (We could also employ a reflected light scheme by placing a single lens and pinhole on only one side of the specimen, and using a half-silvered mirror to separate the entering and exiting rays.) This brings an extra premium because the diffraction patterns of both pinhole apertures are multiplied coherently: the central peak is sharpened and the resolution is increased. (One can think of the lenses on both sides of the microscope combining, in effect, to form a single, larger lens, thus increasing the difference in light path lengths for point-pairs in the object plane.)
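The sharpening of the central peak can be checked numerically. The sketch below is an idealization, not a model of the original instrument: it assumes aberration-free lenses, point-like pinholes, and scalar paraxial optics, so that each lens-plus-pinhole produces an Airy intensity pattern I(v) = (2J1(v)/v)^2 in the normalized optical coordinate v, and the confocal response is taken as the product of the illumination and detection patterns, I(v)^2. The Bessel function is evaluated from its integral representation so that only the standard library is needed; all helper names are illustrative.

```python
import math

def bessel_j1(x: float, n: int = 200) -> float:
    """J1(x) via Bessel's integral (1/pi) * integral_0^pi cos(t - x*sin(t)) dt,
    evaluated with composite Simpson's rule (n must be even)."""
    h = math.pi / n
    total = 0.0
    for k in range(n + 1):
        t = k * h
        weight = 1 if k in (0, n) else (4 if k % 2 else 2)
        total += weight * math.cos(t - x * math.sin(t))
    return total * h / 3 / math.pi

def airy_intensity(v: float) -> float:
    """Normalized Airy pattern (2 J1(v) / v)^2 of one ideal lens imaging a point pinhole."""
    if v == 0.0:
        return 1.0
    return (2 * bessel_j1(v) / v) ** 2

def half_width_at_half_max(psf, lo: float = 0.0, hi: float = 3.8, iters: int = 60) -> float:
    """Bisect for psf(v) = 0.5 on the central lobe (the first Airy zero is near v = 3.83)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if psf(mid) > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Single-pinhole response versus confocal response (product of the two patterns).
widefield = half_width_at_half_max(airy_intensity)
confocal = half_width_at_half_max(lambda v: airy_intensity(v) ** 2)
print(f"HWHM single: {widefield:.3f}, confocal: {confocal:.3f}, "
      f"ratio: {confocal / widefield:.3f}")
```

Under these assumptions the half-width of the central peak shrinks by a factor of roughly 0.72, i.e. the peak is about 1.4 times narrower; pinholes of finite size would give a smaller gain.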

The price of single-point illumination is being able to measure only one point at a time. This is why a confocal microscope must scan the specimen point by point, and that can take a long time, because the total is the sum of all the intervals it takes to collect enough light to measure each image point. That time could be reduced by using a brighter light, but there were no lasers in those days. I began with a carbon arc, the brightest source available. Maintaining it was such a chore that I had to replace it with a second-best source: zirconium arcs, though less intense, were a great deal more dependable. The output was measured with a low-noise photomultiplier circuit that Francis Pipkin helped me design. Finally, the image was reconstructed on the screen of a military surplus long-persistence radar scope. The image remained visible for about ten seconds, which was also how long it took to make each scan.
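The time cost described above is simple arithmetic: the frame time is the number of image points multiplied by the dwell time per point, and the dwell time is the light needed per point divided by the rate at which it arrives. A minimal sketch, assuming shot-noise-limited detection (signal-to-noise ratio equal to the square root of the detected photon count); the function names, image size, and photon rates are hypothetical illustrations, not figures from the original instrument.

```python
def photons_needed(snr: float) -> float:
    """Photons per point for a target SNR, assuming Poisson shot noise: SNR = sqrt(N)."""
    return snr ** 2

def frame_time_s(n_points: int, snr: float, detected_rate_hz: float) -> float:
    """Total scan time: the sum of equal dwell times, one per image point."""
    dwell_s = photons_needed(snr) / detected_rate_hz
    return n_points * dwell_s

# Hypothetical example: a 200 x 200 point image at an SNR of 10 per point.
t_dim = frame_time_s(200 * 200, snr=10, detected_rate_hz=1e6)
t_bright = frame_time_s(200 * 200, snr=10, detected_rate_hz=1e7)
print(t_dim, t_bright)
```

With these made-up numbers the scan takes about 4 s with the dimmer source and about 0.4 s with a source ten times brighter: the frame time scales inversely with brightness, which is why a laser would have helped so much.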

The most serious design problem was choosing between moving the specimen and moving the beam. So far as I know, all modern confocal microscopes use moving mirrors or scanning disks. At first it seemed more elegant to deflect a weightless beam of light than to move a massive specimen. But daunted by the problem of maintaining the three-dimensional alignment of two tiny moving apertures, I decided that it would be easier to keep the optics fixed and move the stage. I was also reluctant to use the single-lens reflected light scheme because I wanted to "see" the image right away! (Not only would dark field be inherently dimmer, but there would also be the fourfold brightness loss that beam splitters always bring.) Modern machines do, in fact, use the single-objective reflected light scheme. A more patient scientist would have accepted longer exposure times and assembled the pictures as photographs, which would have produced permanent records rather than transient subjective impressions. In retrospect it occurs to me that this concern for real-time speed may have been what delayed the use of this scheme for almost thirty years. I demonstrated the confocal microscope to many visitors, but they never seemed very much impressed with what they saw on that radar screen. Only later did I realize that it is not enough for an instrument merely to have a high resolving power; one must also make the image look sharp. Perhaps the human brain requires a certain degree of foveal compression in order to engage its foremost visual abilities. In any case, I should have used film - or at least have installed a smaller screen!
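The fourfold loss mentioned above follows from the light passing the beam splitter twice. A short derivation, assuming an ideal lossless splitter with reflectance $R$ and transmittance $1-R$, with the illumination reflected toward the specimen and the returning light transmitted to the detector (the reverse arrangement gives the same product):

$$
\eta(R) = R\,(1-R), \qquad \frac{d\eta}{dR} = 1 - 2R = 0 \;\Rightarrow\; R = \tfrac{1}{2}, \qquad \eta_{\max} = \tfrac{1}{2}\cdot\tfrac{1}{2} = \tfrac{1}{4}.
$$

So even the best possible lossless splitter delivers at most a quarter of the light on the round trip, which is why the loss is at least fourfold.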

Once I had decided to move the stage, this was not hard to accomplish. The specimen was mounted between two cover slips and attached to a flexible platform that was supported by two strips of spring metal. A simple magnetic solenoid flexed the platform vertically with a 60-hertz sinusoidal waveform, while a similar device deflected the platform horizontally with a much slower sawtooth waveform. The same electric signals (with some blanking and some corrections in phase) also scanned the image onto the screen. Thus the stage-moving system was little more complex than an orthogonal pair of tuning forks. The optical system was not hard to align and proved able to resolve points closer than a micrometer apart, using 45x objectives in air. I never got around to using oil immersion, for fear that it would restrict the depth to which different focal planes could be examined, and because the viscosity might constrain the scan size or tear apart the specimen.
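The stage drive described above is a raster: a fast sinusoid on one axis and a slow sawtooth on the other, with the same two signals driving the display. A minimal sketch, assuming unit amplitudes and taking the roughly ten-second frame period from the earlier paragraph; the function name and normalization are illustrative, and the blanking and phase corrections mentioned in the text are omitted.

```python
import math

FAST_HZ = 60.0   # vertical drive frequency stated in the text
FRAME_S = 10.0   # approximate scan period stated in the text

def stage_position(t: float) -> tuple[float, float]:
    """Normalized (x, y) stage offset in [-1, 1] at time t seconds.

    y: fast 60 Hz sinusoid (vertical).
    x: slow sawtooth that ramps once per frame and then flies back (horizontal).
    """
    y = math.sin(2 * math.pi * FAST_HZ * t)
    phase = (t % FRAME_S) / FRAME_S   # 0 -> 1 over each frame
    x = 2 * phase - 1                 # ramp from -1 to +1
    return x, y

# Each sine period sweeps the platform up and down once while x creeps forward.
periods_per_frame = FAST_HZ * FRAME_S
print(periods_per_frame)  # 600.0
```

Because the vertical motion is sinusoidal, the spot moves fastest at the center of each stroke and lingers near the turning points; the display, driven by the same sinusoid, shares that distortion, so the picture stays geometrically consistent without any linearizing electronics.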

There is also a theoretical advantage to moving the stage rather than the beam: the lenses of such a system need to be corrected only for the family of rays that intersect the optical axis at a single focal point. In principle, that could lead to better lens designs, because such systems need no corrections at all for lateral aberrations. In practice, however, opticians can already make wide-field lenses for visible light that approach theoretical perfection. (This was another thing about optics I had always found astonishing: the mathematical way in which the radial symmetry of a lens causes the odd-order terms of series expansions to cancel out, so that you can obtain sixth-order accuracy by making only two kinds of corrections, of second and fourth order. It almost seems too good to be true that such simple combinations of spherical surfaces, the very shapes that are easiest to fabricate, can transform entire four-dimensional families of rays in such orderly ways.) However, the advantages of combining stage scanning with paraxial optics could still turn out to be indispensable, for example for microscopes in the X-ray domain, for which refractive lenses and half-silvered mirrors may never turn out to be feasible.
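The cancellation described in the parenthesis can be made concrete with the standard wavefront-aberration expansion for a rotationally symmetric system, written here in the usual Hopkins-style notation (which the memoir itself does not use). With normalized image height $h$ and pupil coordinates $(\rho, \theta)$, symmetry means the wavefront error $W$ can depend on these only through the invariants $h^2$, $\rho^2$, and $h\rho\cos\theta$. Each invariant is quadratic, so every term has even total degree, and no odd-order terms can appear (pure piston terms are omitted):

$$
W(h,\rho,\theta) = \underbrace{W_{020}\,\rho^{2} + W_{111}\,h\rho\cos\theta}_{\text{second order}}
+ \underbrace{W_{040}\,\rho^{4} + W_{131}\,h\rho^{3}\cos\theta + W_{222}\,h^{2}\rho^{2}\cos^{2}\theta + W_{220}\,h^{2}\rho^{2} + W_{311}\,h^{3}\rho\cos\theta}_{\text{fourth order}}
+ O(6).
$$

For the single on-axis point used in stage scanning, $h = 0$, and only $W_{020}\rho^{2} + W_{040}\rho^{4} + W_{060}\rho^{6} + \cdots$ (defocus and spherical aberration) survive. This is one way to read the remark that such a system needs no corrections for lateral aberrations.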

In constructing the actual prototype, the electronic aspects seemed easy enough because, a few years earlier, I had already built a learning machine (to simulate those neuronal nets) - and that system contained several hundred vacuum tube circuits. But the world of machining was new to me. Constructing an optical instrument was to live in a world where the critical issue of each day was how to clamp some bar of steel to the baseplate of a milling machine, what sort of cutter and speed to use, and how to keep the workpiece cool. I became obsessed with finding ways to reduce the thermal expansion under the wheel of a grinding machine; no matter how flat a surface seemed, I'd find new bumps the following day. (Perhaps I was haunted by Lyman's ghost.) By the time the prototype was complete, I understood how the principles of kinematic design had made most of that precision unnecessary. I could have saved months. Still, the machine shop experience was not wasted. A decade later, it helped me to build a singularly versatile robotic arm and hand.

Scanning is far more practical today because we can use computers to transform and enhance the images. In those days, computers were just becoming available, and my friend Russell Kirsch was already doing some of the first experiments on image analysis. He persuaded me to try some experiments using the SEAC computer at the Bureau of Standards. However, that early machine's memory was too small for those images, and we did not yet have adequate devices for digitizing the signals. In subsequent years, both Kirsch and I continued to pursue those same ideas of closing in on the vision problem by combining bottom-up concepts of feature extraction with top-down theories about the syntactic and semantic structures of images. Eventually, Kirsch applied those techniques to "parsing" pictures of actual cells, while I pursued the subject of making computers recognize more commonplace sorts of things. I should mention that I was also working with George Field (who also helped with the microscope design) on how to use computers to enhance astronomical images. Such schemes later became practical, but at that time they, too, were defeated by the cost of memory. I returned to physical optics only once more, in the mid-1960s, in building computer-controlled scanners for our mechanical robotics project and in studying the feasibility of using somewhat similar systems in conjunction with radiation therapy.

I also pursued another dream: a microscope that was not optical but entirely mechanical. Perhaps there were structures that could not be seen because they could not be selectively stained. What, for example, served to hold the nucleus away from the walls of a cell? Perhaps there was a scaffold of invisible fibres that one might recognize by plucking them, and then measuring the strain or seeing other things move. I examined the various micromanipulators that already existed but, finding none that seemed suitable, I designed one which I hoped to use in conjunction with my new microscope. Again, the Society of Fellows came to my aid, this time in the person of Carroll Williams, who invited me to build it in his laboratory. The new micromanipulator was extremely simple: I mounted the voice coils of three loudspeakers at right angles and connected them with stiff wires to a diagonally mounted needle probe. The needle could be moved in any spatial direction simply by changing the currents in the three coils. The only hard part was replacing the coil suspensions with materials free from mechanical hysteresis. The resulting probe could be swiftly moved with precision better than 100 nanometers over a range of more than a millimeter. (At first this sensitivity was limited by power supply noise; using batteries solved that.) To control the probe, my childhood classmate Edward Feder, who was now also working in Williams' laboratory, constructed a three-dimensional electrical joystick by attaching three conductive sheets to the sides of a tank of salt water. Everyone seemed to like this instrument, so we left it in the laboratory, but it was never actually put to use, and I have no idea what became of it. I had planned to measure the infinitesimal forces by applying very high frequency vibrations to a microelectrode mounted on the probe and correlating the waveforms against the needle deflections as observed through the scanning microscope. I never got around to this because, by 1956, AI was already on the march.
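To first order, the three-coil probe behaves as a linear device: each voice coil pushes along its own axis by an amount proportional to its current, so the needle position is a 3 x 3 calibration matrix times the vector of coil currents, and steering the needle to a target means solving that linear system. The sketch below assumes exactly such a linear, hysteresis-free model; the calibration values and function names are hypothetical. It also works out the dynamic range implied by the figures in the text (better than 100 nm over more than 1 mm): under this linear model, each coil current must be held to roughly one part in ten thousand of its full-scale value, which is consistent with power supply noise being the first limit on sensitivity.

```python
def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def currents_for(target_um: list[float], gain: list[list[float]]) -> list[float]:
    """Solve gain @ i = target for the coil currents i using Cramer's rule."""
    d = det3(gain)
    if abs(d) < 1e-12:
        raise ValueError("calibration matrix is singular")
    currents = []
    for col in range(3):
        m = [row[:] for row in gain]   # copy, then replace one column by the target
        for r in range(3):
            m[r][col] = target_um[r]
        currents.append(det3(m) / d)
    return currents

def position_um(currents: list[float], gain: list[list[float]]) -> list[float]:
    """Forward model: needle displacement produced by the given coil currents."""
    return [sum(gain[r][c] * currents[c] for c in range(3)) for r in range(3)]

# Hypothetical calibration: about 10 um of travel per mA on each axis,
# with small assumed cross-coupling between axes.
GAIN_UM_PER_MA = [[10.0, 0.2, 0.0],
                  [0.1, 10.0, 0.3],
                  [0.0, 0.2, 10.0]]

target = [5.0, -2.0, 1.0]
currents_ma = currents_for(target, GAIN_UM_PER_MA)
print(currents_ma, position_um(currents_ma, GAIN_UM_PER_MA))

# Figures from the text: better than 100 nm precision over more than 1 mm.
range_steps = round(1e-3 / 100e-9)
print(range_steps)  # 10000
```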

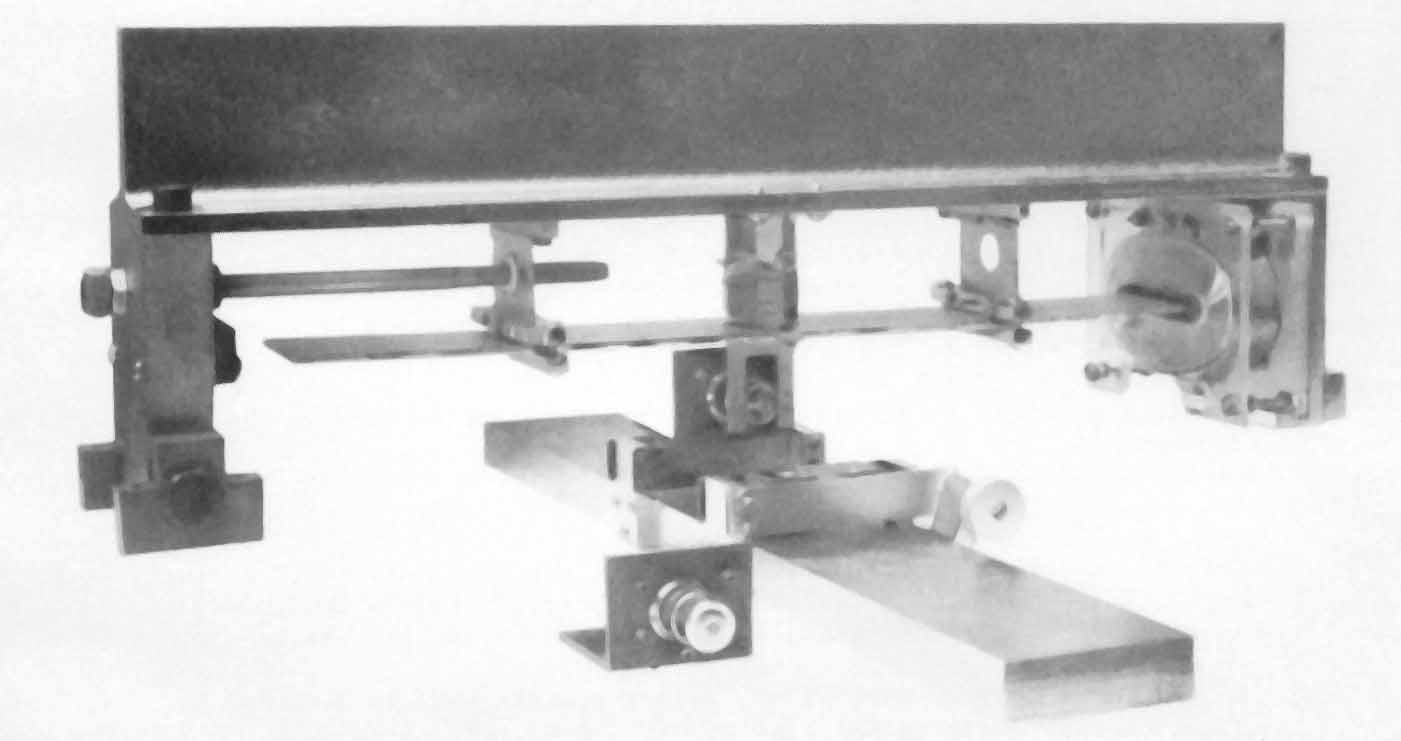

This is what I remember now, and it may not all be accurate. I've never had much conscious sense of making careful, long-range plans, but have simply worked from day to day without keeping notes or schedules, or writing down the things I did. I never published anything about that earliest learning machine, or about the micromanipulator, or even about that robot arm. In the case of the scanning microscope, it was fortunate that my brother-in-law, Morton Amster, not only liked the instrument but also happened to be a patent attorney. Otherwise I might never have documented it at all. The learning machine and the micromanipulator disappeared long ago but, only today, while writing this, I managed to find the microscope, encrusted with thirty years of rust. I cleaned it up, took this photograph, and started to write an appropriate caption, but then found the right thing in a carbon copy of a letter to Amster dated November 18, 1955.

Photo of the prototype.

First page of patent.