UI on the Fly: Generating Multimodal Interactions

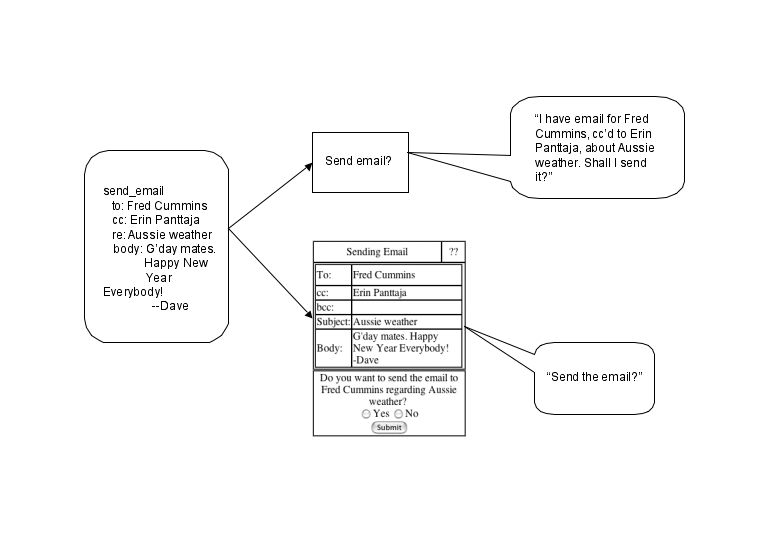

David Reitter, Erin Panttaja, Fred Cummins

We are developing a technique that allows a computer to automatically generate multimodal user interfaces, in particular for small computers such as cell phones or iPAQs. We enable these devices to engage in natural language conversation, using screen and voice output, and touch-screen and voice input at the same time. The output is tailored to the particular usage situation (in a restaurant, in the car, at home), as well as to the device and preferences of the user. The central system can thus remain blissfully agnostic as you switch from using a phone, to a PDA, to a computer, and back.

- Content Coordination between the modes allows users and the system to use voice and touchscreen together.

- Natural Language helps inexperienced, mobile, or handicapped users to gain access to the system.

- Maximum Utility Modulation customizes the output, so that it is as easy to understand as possible and as efficient as communication resources permit.

- Dynamic Adaptation changes the interaction based on context, the user's abilities, and user preferences.
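To make the idea of tailoring output to the usage situation more concrete, here is a minimal sketch of utility-based output selection. It is an illustration only and not the project's implementation: the `Context` and `Candidate` types, the numeric scores, and the simple "benefit minus cost" utility are all assumptions introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical usage situation; both values range from 0 to 1."""
    screen_usable: float  # how well the user can look at the screen
    audio_usable: float   # how well the user can hear voice output


@dataclass(frozen=True)
class Candidate:
    """Hypothetical output variant for one system turn."""
    name: str
    screen_load: float  # how much the variant relies on the screen (0..1)
    voice_load: float   # how much the variant relies on voice (0..1)
    benefit: float      # assumed informativeness of the variant


def utility(candidate: Candidate, ctx: Context) -> float:
    # Assumed scoring: relying on a channel costs more the less usable
    # that channel is in the current situation.
    cost = (candidate.screen_load * (1 - ctx.screen_usable)
            + candidate.voice_load * (1 - ctx.audio_usable))
    return candidate.benefit - cost


def choose_output(candidates: list[Candidate], ctx: Context) -> Candidate:
    # Pick the variant with the highest utility for this context.
    return max(candidates, key=lambda c: utility(c, ctx))


CANDIDATES = [
    Candidate("screen_list", screen_load=1.0, voice_load=0.0, benefit=1.0),
    Candidate("spoken_summary", screen_load=0.0, voice_load=1.0, benefit=0.8),
    Candidate("mixed", screen_load=0.5, voice_load=0.5, benefit=1.1),
]

# Illustrative contexts; the numbers are made up for the example.
IN_CAR = Context(screen_usable=0.1, audio_usable=0.9)
IN_RESTAURANT = Context(screen_usable=0.9, audio_usable=0.2)
AT_HOME = Context(screen_usable=1.0, audio_usable=1.0)

for label, ctx in [("car", IN_CAR), ("restaurant", IN_RESTAURANT), ("home", AT_HOME)]:
    print(label, "->", choose_output(CANDIDATES, ctx).name)
```

With these made-up numbers, the sketch prefers spoken output in the car, screen output in a noisy restaurant, and a mixed presentation at home. A real system would derive the scores from the device, the user's abilities, and user preferences rather than from fixed constants.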

Projects:

- MUG Workbench is a system for generating different kinds of multimodal interactions.

- FASiL is an EU project to create an adaptive multimodal user interface.

- Multimodal Centering is a theory for generating user interfaces that use pronouns and other referring expressions.
